The LDT, a Perfect Home for All Your Kernel Payloads

Using the HIB segment to bypass KASLR on x86-based macOS

With the broad adoption of Kernel Address Space Layout Randomization (KASLR) by modern systems, obtaining an information leak is a necessary component of most privilege escalation exploits. This post will cover an implementation detail of XNU (the kernel used by Apple’s macOS) which can eliminate the need for a dedicated information leak vulnerability in many kernel exploits.

The key lies in the __HIB segment of the kernel Mach-O, containing a subset of functions and data structures for system hibernation and low-level CPU management, which is always mapped at a known address.

We will first provide a general overview of the __HIB segment and the various ways that it can be abused. Using our Pwn2Own 2021 kernel exploit as a real-world example, we show how this exploit could be simplified to use the general techniques described here removing the need for its previously tedious leak construction.

The __HIB Segment: Hibernation and Kernel Entry

The namesake of the __HIB segment derives from ‘hibernation’, and seemingly its primary purpose is to handle resuming the system from a hibernation image.

Its other primary functionality is to handle low-level entry and exit to/from the kernel, i.e. via interrupts or syscall. It houses the interrupt descriptor table (IDT) and the corresponding interrupt handler stubs.

It likewise contains the stubs for entry through the syscall and sysenter instructions. These entrypoints are set by writing the corresponding MSRs:

This snippet uses the DBLMAP macro to reference each function’s alias in the “dblmap,” a concept we’ll cover next.

The XNU dblmap

The dblmap is a region of virtual memory aliased to the same physical pages making up the __HIB segment.

The dblmap is initialized in doublemap_init(), which inserts virtual aliases into the page tables for all physical pages that make up the __HIB segment. The base of the dblmap is randomized with 8 bits of entropy.

The aliasing prevents a trivial KASLR bypass using the sidt instruction, which will reveal the kernel address of the IDT. This instruction is not a privileged instruction and will not cause an exception if executed in ring3 (usermode), unless User-Mode Instruction Prevention (UMIP) is enabled on the processor (it’s not in XNU):

User-Mode Instruction Prevention (bit 11 of CR4) — When set, the following instructions cannot be executed if CPL > 0: SGDT, SIDT, SLDT, SMSW, and STR. An attempt at such execution causes a general-protection exception (#GP).

This “leak” is known, as referenced by the following comment from the XNU source:

Since the IDT/GDT/LDT/etc are located in the dblmap alias, knowing their addresses in the dblmap does not reveal their addresses in the normal kernel mappings. They do, however, leak the dblmap base.

Mitigating Meltdown

An auxiliary purpose of the dblmap is that it functions as a Meltdown mitigation. The common technique adopted by the various operating systems was to remove kernel mappings from the page tables when executing userspace code, then re-map the kernel upon taking an interrupt/syscall/etc. Linux calls its implementation Kernel Page Table Isolation (KPTI), on Microsoft Windows the equivalent became known as KVA Shadow.

Both implementations are similar in that when executing user code, only a small subset of kernel pages are present in the page tables. This subset contains the code/data required to handle interrupts and syscalls and transition back and forth between user and kernel page tables. In the case of XNU, the “small subset” is precisely the dblmap.

In order to make the page table transition, the interrupt dispatch code needs to know the proper page table addresses to switch between. In other words, what value to write to cr3, the control register that holds the physical address of the top-level paging structure.

These values reside in a per-CPU data structure in one of the __HIB segment’s data sections:

cpshadows[cpu].cpu_ucr3: for userspace + dblmapcpshadows[cpu].cpu_shadowtask_cr3: for userspace + full kernel

Upon entry from userspace, the interrupt dispatch code will switch cr3 to cpu_shadowtask_cr3, then back to cpu_ucr3 before returning to userspace.

(Mostly) Mitigating Meltdown…

Since the dblmap is mapped into the page tables when executing user code, anything in the dblmap is, in theory, readable using Meltdown. This brings about the question of whether any “sensitive” data is present in the dblmap or not.

Most notably, there are several function pointers present as data, used to jump from the dblmap into normally-mapped kernel functions. Leaking any of these is sufficient to determine the kernel slide (an XNU term to describe the randomized kernel base address) and break KASLR.

It is interesting to note that KASLR can be similarly broken with Meltdown on both Windows and Linux:

Nice! FTR, Meltdown can still also be used on Linux to bypass KASLR by leaking some IDT entries. https://t.co/5NxuyfAXcQ pic.twitter.com/R2RXHmWoYc

— Andrey Konovalov (@andreyknvl) June 30, 2020

This same strategy works on fully up-to-date versions of macOS running vulnerable Intel hardware. Simply using libkdump to read from the dblmap works as-is. In short, Meltdown can be used to break KASLR on everything but the final generation of Intel-based Mac models, which shipped with Ice Lake processors containing CPU-level fixes for Meltdown.

While certainly an important and almost universal break of KASLR across Intel-based Macs, we will now discuss several other useful kernel exploit primitives provided by dblmap that apply to all generations including Ice Lake.

The LDT - Controlled Data at a Known Kernel Address

From an exploitation perspective, perhaps the most interesting artifact of the dblmap is that it holds the LDT for each CPU core, which can contain almost arbitrary data controllable from user mode, and is located at a fixed offset within the dblmap.

Review of LDT/GDT Mechanics

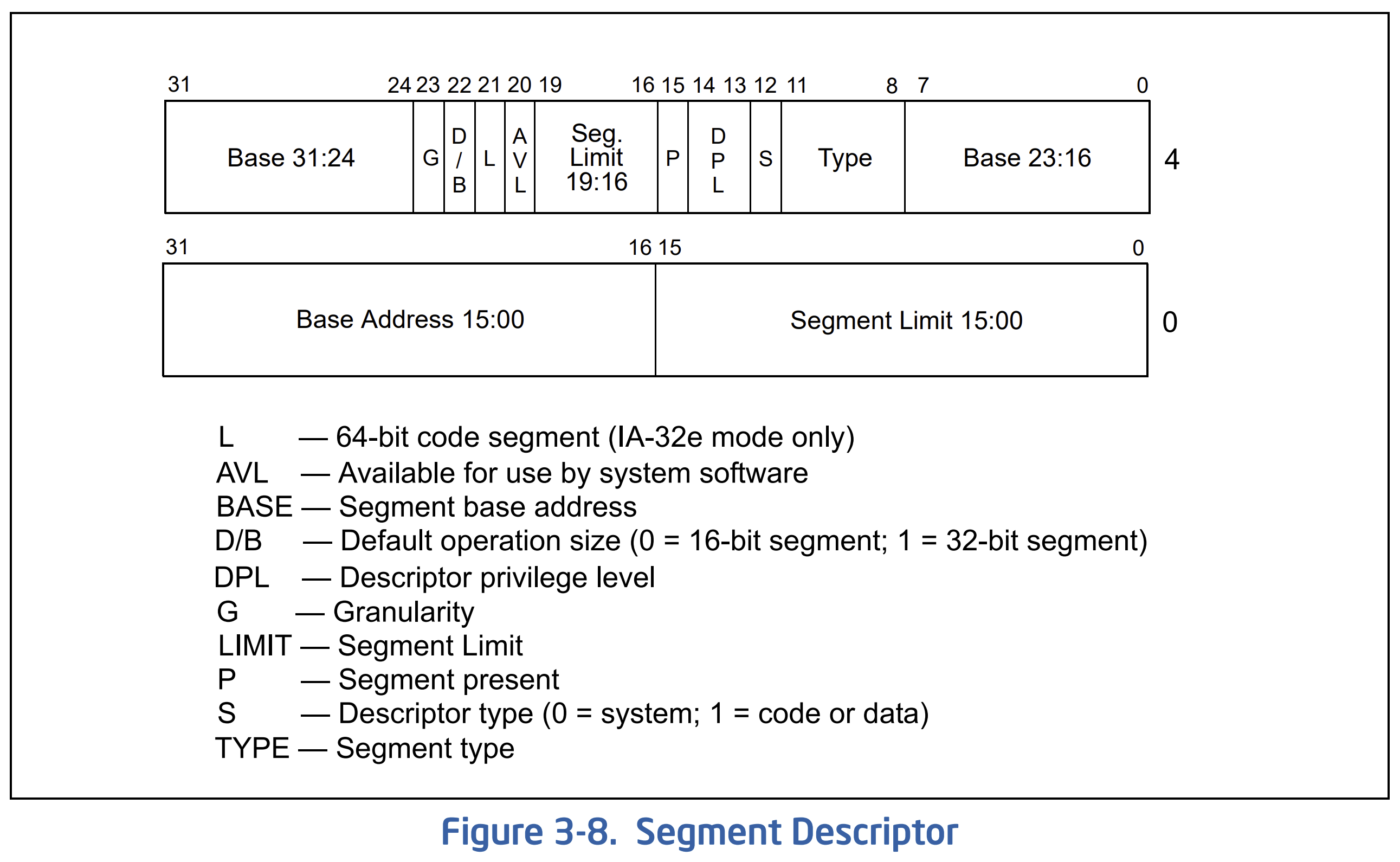

The LDT and GDT contain a number of segment descriptors. Each descriptor is either a system descriptor, or a standard code/data descriptor.

A code/data descriptor is an 8-byte structure describing a region of memory (specified by a base address and limit) with certain access rights (i.e. if it’s readable/writable/executable, and what privilege levels can access it). This is mostly a 32-bit protected mode concept; if executing 64-bit code, base addresses and limits are ignored. A descriptor can describe a 64-bit code segment, but the base/limit are not used.

From Intel manual volume 3A section 3.4.5

System descriptors are special, and most are expanded to 16-bytes to accomodate specifying 64-bit addresses. They can be one of the following types:

- LDT - specifies address and size of an LDT

- task-state segment (TSS) - specifies address of the TSS, a structure with info related to privilege level switching

- Call, interrupt, or trap gate - specifies an entry point for a far call, interrupt, or trap

The only system descriptors permitted in the LDT are call gates, which we’ll explore later on.

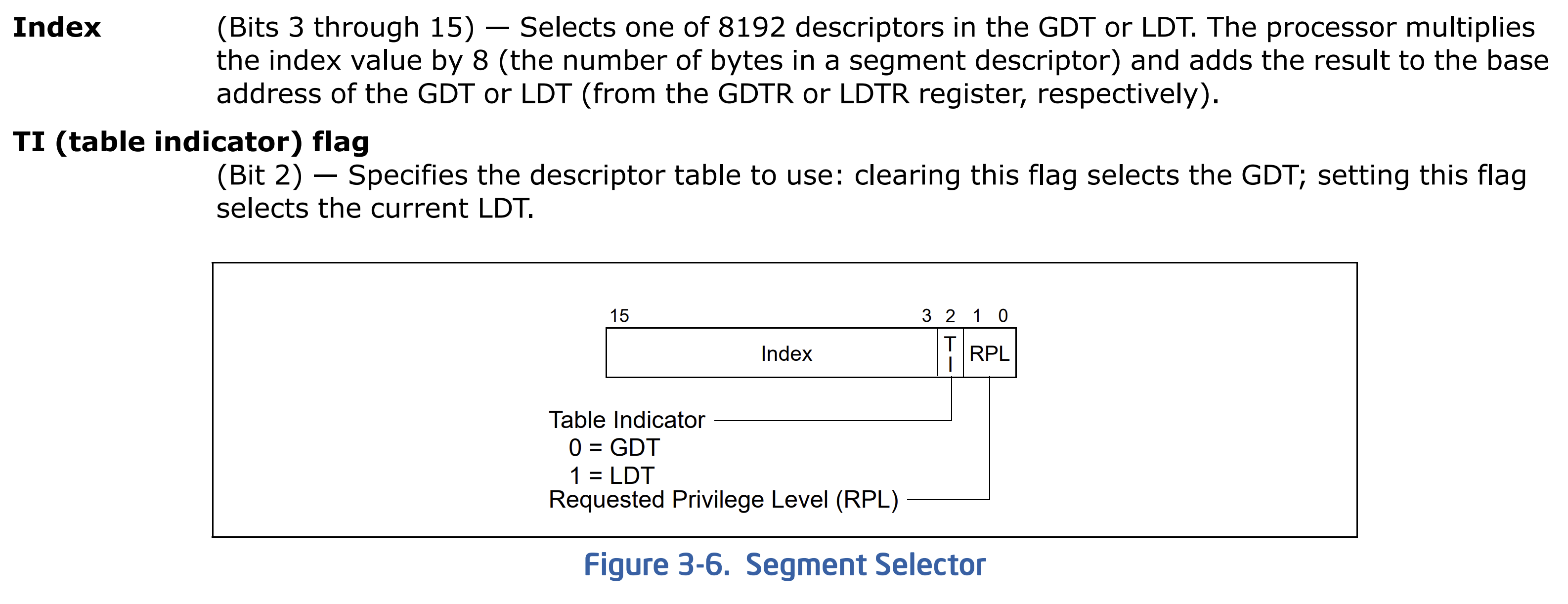

Segment descriptors are referenced with a 16-bit segment selector:

From Intel manual volume 3A section 3.4.2

For example, the cs register (code segment), is a selector referencing the segment for whatever code is currently executing. By setting up distinct descriptors in the GDT/LDT for 64-bit and 32-bit code, the OS can enable userspace jumping between protected mode (32-bit code) and long mode (64-bit code) through the use of the appropriate selectors. Specifically on macOS, the hardcoded selectors are:

0x2b: 5th GDT entry, ring3 – 64-bit user code0x1b: 3rd GDT entry, ring3 – 32-bit user code0x08: 1st GDT entry, ring0 – 64-bit kernel code0x50: 10th GDT entry, ring0 – 32-bit kernel code

LDT Mechanics on macOS

A process can set descriptors in its LDT from user mode by calling i386_set_ldt(). The corresponding kernel function this invokes is i386_set_ldt_impl().

This function imposes some constraints on what types of descriptors are allowed in the LDT. A descriptor must be empty, or specify a userspace 32-bit code/data segment:

In terms of the binary format, this translates to the following constraints on each 8-byte descriptor:

- byte 5 (

dp->access): must be either 0, 1, or have high nibble0xf(value matching0xf.) - byte 6 (

dp->granularity): bit 5 cannot be set (mask0x20)

Using the LDT to create fake kernel objects at a known kernel address, these constraints aren’t too restrictive. The only real problem is being able to construct valid kernel pointers within such an object.

To deal with this, we can misalign the fake object by an offset of 6 bytes. This will overlap dp->access with the high byte of the kernel pointer (which will be 0xff). The only constraint will then be that the low byte of the pointer (overlapped with dp->granularity) cannot have bit 5 set.

Successfully setting the LDT descriptors creates a heap-allocated LDT structure, a pointer to which is stored in the task->i386_ldt field of the current task structure.

Whenever a context switch occurs or the LDT is updated manually, the descriptors from this structure are copied by the kernel into the actual LDT (in the dblmap) by user_ldt_set().

A process can query its current LDT entries with i386_get_ldt(), invoking kernel function i386_get_ldt_impl(). This copies out descriptors directly from the actual LDT (in the dblmap), not the LDT struct.

Known Code at a Known Kernel Address

When writing a macOS kernel exploit without a kernel code address leak, the subset of functions in the __HIB text section can potentially serve as useful call targets.

Some notable functions (at fixed offsets) in the dblmap are:

memcpy(),bcopy()memset(),memset_word(),bzero()hibernate_page_bitset()- sets or clears a bit in a bitmap structurehibernate_page_bitmap_pin()- may copy a 32-bit int from 1st structure argument to 2nd pointer argumenthibernate_sum_page()- returns a 32-bit int from its 1st argument as an array, using the 2nd as the indexhibernate_scratch_read(),hibernate_scratch_write()- like memcpy, but 1st argument is a structure

The utility of these functions to a kernel exploit will be heavily dependent on the specific context when control flow is hijacked. Most of the kernel call targets listed above require 3 arguments. If a given vulnerability allows multiple controlled calls in sequence, you may also be able to use earlier calls to set registers/state for use in later calls.

Whatever the case may be, the dblmap can often be used to transform a simple kernel control flow hijack primitive into something that also produces a true kernel text (code address) leak.

Other Miscellaneous dblmap Tricks

A Truly Arbitrary 64-bit Value at a Known Kernel Address

We’ve already covered how the dblmap allows us to easily place almost arbitrary data in the LDT.

But say you need a truly arbitrary 64-bit value (maybe the function pointer you need has bit 5 set, and won’t meet the LDT constraints). When taking a syscall, the dispatch stub will save rsp (the userspace stack pointer) in a scratch location in the dblmap (cpshadows[cpu].cpu_uber.cu_tmp). The user rsp value will also be placed on the interrupt stack in the dblmap (either master_sstk for cpu 0, or scdtables[cpu]).

Although not its intended usage, rsp can be treated as a general-purpose register, and assigned an arbitrary 64-bit value. Barring the development inconvenience of needing to set/restore rsp before and after the syscall, this gives an arbitrary 64-bit value at a known address.

Leaking Kernel Pointers Into the LDT

Another useful aspect of the LDT is that it can be used as a writable location for a true kernel address leak, which can be queried and read out from user mode with i386_get_ldt(). Say you are able to use a hijacked function call to copy from an arbitrary address into the LDT. Copying one of the function pointers in the dblmap into the LDT, then querying the appropriate range of the LDT, will obtain a text leak.

Or as another example, perhaps the 1st argument to your hijacked function call is the fake object in the LDT, the 2nd is some valid C++ object, and the 3rd is any small enough integer. Calling memcpy() will copy the vtable (and some of the object’s fields) into the LDT. Querying the LDT then reads those leaks out to userspace.

There is one caveat with this technique, in that it can be racy. Remember that there is an LDT per CPU core, and there is no method on macOS to pin a process to any specific core. If a thread performs the hijacked function call, copying the leaks into its LDT, then gets preempted before it can call i386_get_ldt(), the leaks will effectively be lost.

Determining the Current CPU

While not really a “trick,” it’s necessary to identify the current CPU number in order to match up the right structure addresses in the dblmap. Each core has its own GDT (in the dblmap), so the sgdt instruction can be used to determine the current CPU. You can then busy loop until the “correct” GDT is seen.

One example of when this needs to be done is after populating the LDT with a payload, the task needs to execute on the right core before the payload will be present in that core’s LDT in the dblmap.

Using the dblmap to Simplify Real-World Exploits

To provide a concrete example that demonstrates the utility of the dblmap, we will show how our Pwn2Own 2021 kernel exploit could have been greatly simplified by using the dblmap instead of its overly complicated construction of several leak primitives. The source for this variation is available on GitHub along with the rest of the full Safari-to-Kernel chain.

The complete details of the bug/exploit were covered in a prior post, but it suffices to say that we’ll begin from a point in the exploit where:

- We have an arbitrary write into a freed kernel buffer

- We have no kernel information leaks

We can easily reclaim our freed kernel buffer with the backing storage of a new OSArray, which contains OSObject pointers. These are C++ objects, so corrupting a kernel data pointer in the array to point at a fake object should be sufficient to hijack control flow through a virtual call.

Thanks to the dblmap, we can place known data at a known kernel address. Meaning we can construct the fake kernel object (and its vtable) in the LDT without requiring a true kernel text or data leak.

(For simplicity, we select CPU 0 as the “right” CPU, whose LDT corresponds to the master_ldt symbol in the kernel)

Now that we are able to hijack kernel control flow, we need to investigate what the register state will be at the call, and how this can be turned into a useful invocation of a dblmap function.

Argument Control at the Hijacked Kernel Call Site

In this case, a copy of the corrupted OSArray will be made, and we are first able to hijack control in OSArray::initWithObjects(), which is passed the backing store containing corrupted objects. The corrupted backing store is iterated over, and the taggedRetain() virtual function is invoked for each object.

- The first argument to the call will naturally be the

thispointer, which will point at the fake object in the LDT - The second argument is the “tag,” which will be

&OSCollection::gMetaClass

There is no explicit third argument, but we can still look at how the function was compiled, and determine what may be in rdx (the third argument according to the calling convention) at the call site.

It happens to be used during a count++ operation, meaning it will be equal to the fake object’s index in the corrupted array plus one. In other words, if we corrupt the object at index i in the array, the third argument for its corresponding call will be i+1. This gives us control over the third argument, as long as it’s a relatively small nonzero integer.

Since the second argument, &OSCollection::gMetaClass, is an instance of OSMetaClass, a C++ object, its first field is a vtable. It would be relatively simple to call memcpy() with a third argument of 8, which would copy the vtable into the LDT. The vtable could then be read out with i386_get_ldt() as discussed previously, giving a text leak.

From there, we could use OSSerializer::serialize() to turn the call with a controlled this argument into an arbitrary function call with 3 controlled arguments (assuming the LDT constraints can be met). This would likely be sufficient to get enough leaks to remove dependence on the LDT and continue the exploit from there.

But let’s do something more interesting instead.

Setting Arbitrary Bits in the LDT

The signature for the hibernate_page_bitset() function is:

The second boolean argument, set, determines whether the function sets or clears a bit. It will be true in our case, since &OSCollection::gMetaClass is nonzero.

The third argument, page, specifies which bit to set or clear. As mentioned previously, we can make this any small nonzero integer.

The first argument, which will be our fake object in the LDT, has the following structure:

In short, it is a variably sized array of bitmaps, where each bitmap is associated with a range of bit indices.

Since we control the fake structure and the bit index, invoking this function allows us to set arbitrary bits in the LDT.

Note on Preemptions:

It should be noted that the memory contents of the LDT in the dblmap are ephemeral, in the sense that they become irrelevant if the current task (process/thread) is preempted.

When a new task starts executing on the CPU, if it has an LDT it will get copied into the dblmap, overwriting the existing corrupted contents. Likewise, when the preempted task (with previously corrupted LDT) is rescheduled, the LDT copied into the dblmap originates from the heap-allocated structure, effectively removing the corruption.

In our case we can mostly ignore this detail, since there won’t be any penalty or crash on failure, and we could re-perform the hijacked bitset calls if desired. Additionally, OSArray::initWithObjects() is iterating over the corrupted backing store in a loop, meaning we can hijack a virtual call for each slot in the array, and invoke the bitset function multiple times in one pass. We don’t need to go back and forth to userspace each time we set a bit, meaning less time elapsed, and the lower the chance our task is preempted.

Another option would be to corrupt one of the first 3 descriptors in an LDT. These are hardcoded and cannot be modified with i386_set_ldt():

Since they aren’t expected to change, these entries are not reset each time a new task executes on a CPU. Any corruptions to these 3 descriptors in any of the dblmap’s LDTs (one per CPU) will persist across preemptions.

Constructing a Call Gate

Setting bits in the LDT sounds powerful, but what can we actually do with it?

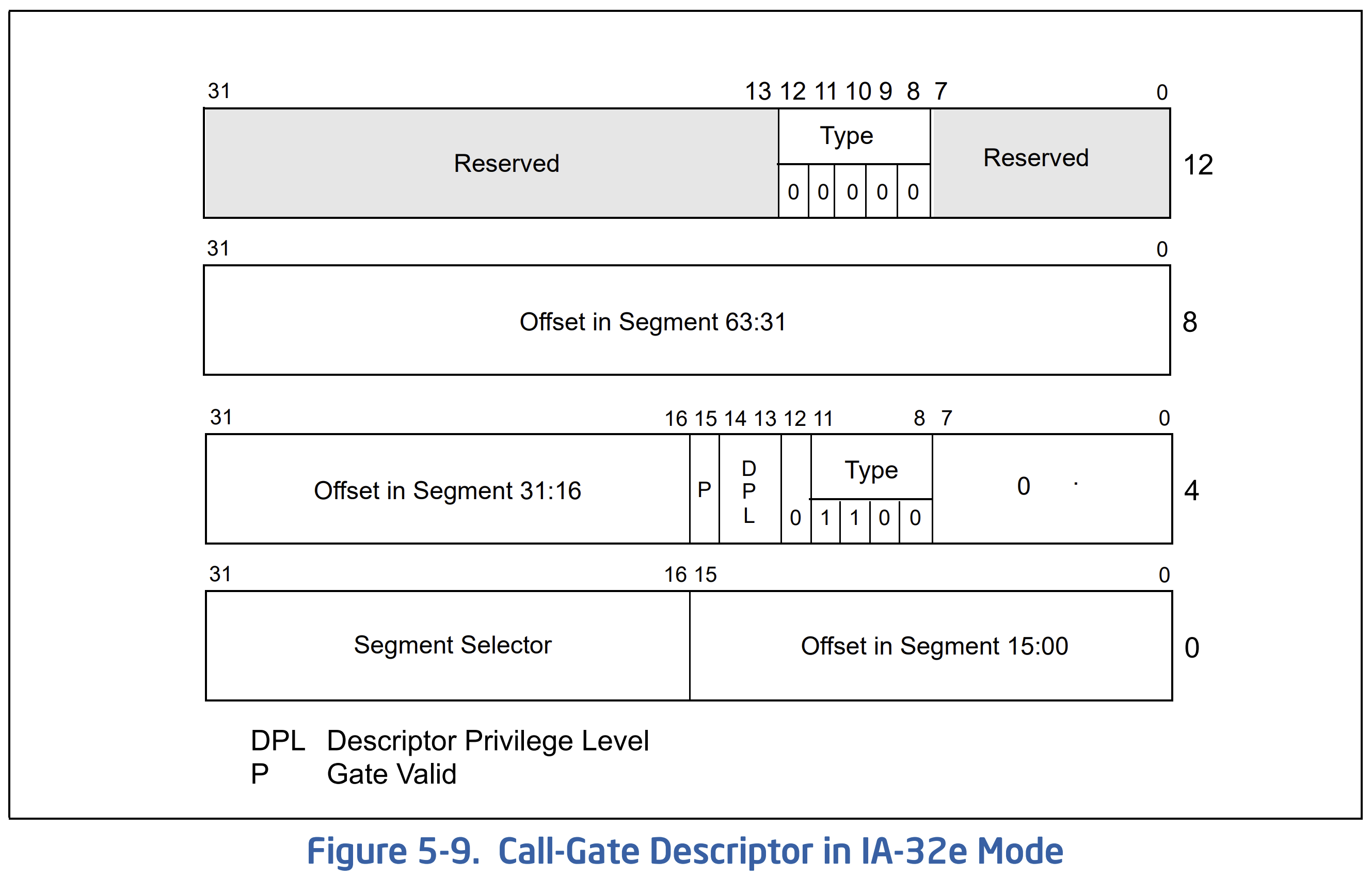

Remember that there are two types of descriptors: code/data descriptors for memory regions, and system descriptors. The only system descriptors permissible in the LDT are call gates. As the Intel manual states:

Call gates facilitate controlled transfers of program control between different privilege levels.

They are primarily defined by 3 things:

- the privilege level required to access the gate

- the destination code segment selector

- the destination entry point

The binary format:

From Intel manual volume 3A section 5.8.3.1

When making a far call through a call gate from userspace (ring3), roughly the following happens:

- Check privileges (the call gate descriptor’s DPL field must be 3)

- The destination code segment descriptor’s DPL field becomes the new privilege level

- Use the new privilege level to select a new stack pointer from the TSS

- Push the old

ssandrsponto the new stack - Push the old

csandriponto the new stack - Set

csandripfrom the call gate selector and entry point

Constructing a call gate that can be accessed by ring3 (DPL of 3) and specifies the code segment selector for 64-bit kernel code (0x8), will allow us to perform a far call to any address we specify, as ring0.

Abusing call gates for kernel exploitation has been demonstrated before, but in the context of 32-bit Windows, when SMEP, SMAP, and page table isolation weren’t concerns.

Leaking the Kernel Slide

When we trigger the far call, Supervisor Mode Execution Prevention (SMEP) prevents us from jumping to executable code in userspace. The page tables will still be in user mode, where the only mapped kernelspace pages are for the dblmap. This leaves the only option of jumping into existing code in the __HIB text section.

We’ll direct our far call into the middle of ks_64bit_return(), which contains the following instruction sequence:

mov r15, qword ptr [r15 + 0x48]

jmp ret64_iret

ret64_iret:

iretq

Remember that we have full register control (except for rsp, which will be the kernel stack), so the first instruction dereferencing r15 gives us an arbitrary read primitive. We simply set r15 appropriately for the address we want to read from, make the far call, and upon returning to userspace, r15 will contain the dereferenced data.

This can serve to leak one of the function pointers out of the dblmap, which would reveal the kernel slide. To be specific, we can leak the ks_dispatch() pointer from idt64_hndl_table0.

There is a slight caveat here, in that returning from a far call would usually be done with a far return instruction retf, not an iretq interrupt return. The stack layout will be slightly different than expected:

rflags will be set from the old rsp, while rsp will be populated with the old ss, and ss from whatever happens to be on the stack afterwards. This means that:

rflagscan be ‘restored’ by settingrspto therflagsvalue before making the far callrspbeing set from the oldssis a non-issue, we can just restorerspourselves

The next value on the stack loaded into ss will be a kernel pointer, which will be an invalid selector, and will trigger an exception. This exception however, will occur in ring3, after the interrupt return, so we can simply use intended Mach exception handling behavior to catch it.

This can be done using thread_set_exception_ports() to register an exception port for EXC_BAD_ACCESS. We spawn a thread to wait for the exception message, then respond with a message body containing the correct ss selector, allowing the far call thread to continue.

Control Page Tables, Control Everything

Not only does the kernel slide reveal the kernel base’s virtual address, it likewise reveals its physical address. This gives us the physical address of the LDT for CPU 0, or in other words, the physical address of controlled data.

This gives us an extremely powerful tool:

- If we reconstruct the call gate to jump to a

mov cr3instruction, we’ll immediately gain arbitrary ring0 code execution

All we need to do is make sure we set up our fake page tables (residing in the LDT) properly:

- The virtual address of the instruction following the

mov cr3should map to the physical address of shellcode smuggled into the LDT - The kernel stack should be mapped and writable (for CPU 0, this is symbol

master_sstkin the__HIBsegment) - Empirically unnecessary, but to be safe, the GDT should be mapped (for CPU 0, symbol

master_gdt)

Note that this is only true for CPU 0, which has its LDT statically allocated in the __HIB segment. The LDTs for other CPUs are allocated from the kernel heap, with virtual aliases inserted into the page tables for the dblmap. The physical addresses of these heap allocations cannot be directly inferred from the kernel slide.

Kernel Shellcode

With the constraints on the LDT descriptors, the shellcode we smuggle into the LDT will consist of chunks of 6 arbitrary bytes. If we use 2 bytes for a short jump (EB + offset), this leaves us chunks of 4 arbitrary bytes.

While in theory this is game over, it would be easier to obtain unconstrained shellcode execution in a state with “normal” page tables. In pursuit of this goal, a simple solution is to use our constrained shellcode to disable SMEP and SMAP (which are just bits in the CR4 register), then return back to userspace. We can then trigger a hijacked virtual kernel call as before, but this time jump to arbitrary shellcode in userspace.

A minor detail here is that control registers exist per CPU, so SMEP and SMAP would only be disabled on the CPU that executes the LDT shellcode.

Another implementation detail is how to go about cleanly returning to userspace, which would require restoring the original page tables. We do this by having the shellcode perform the following:

- Read

cpshadows[i].cpu_ucr3from the dblmap intorax - Modify the stack to form a valid

iretqlayout - Jump into

ks_64bit_return()where it performs a cr3 switch (fromrax) followed by aniretq

Conclusion

The concepts presented here highlight several powerful generalized techniques for macOS kernel exploits on Intel-based systems. We demonstrated how the dblmap can substantially weaken the efficacy of KASLR, provide several interesting kernel call targets, host smuggled kernel shellcode, and more.

These primitives were used in the practical exploitation of numerous kernel vulnerabilities we responsibly disclosed to Apple over the past year. Abusing core low-level constructs of the operating system can lead to very interesting consequences, and prove incredibly challenging to mitigate.

As linked previously, the source for a reference exploit using these techniques is available here.