Pwn2Own Automotive: CHARX Vulnerability Discovery

Abusing Subtle C++ Destructor Behavior for a UAF

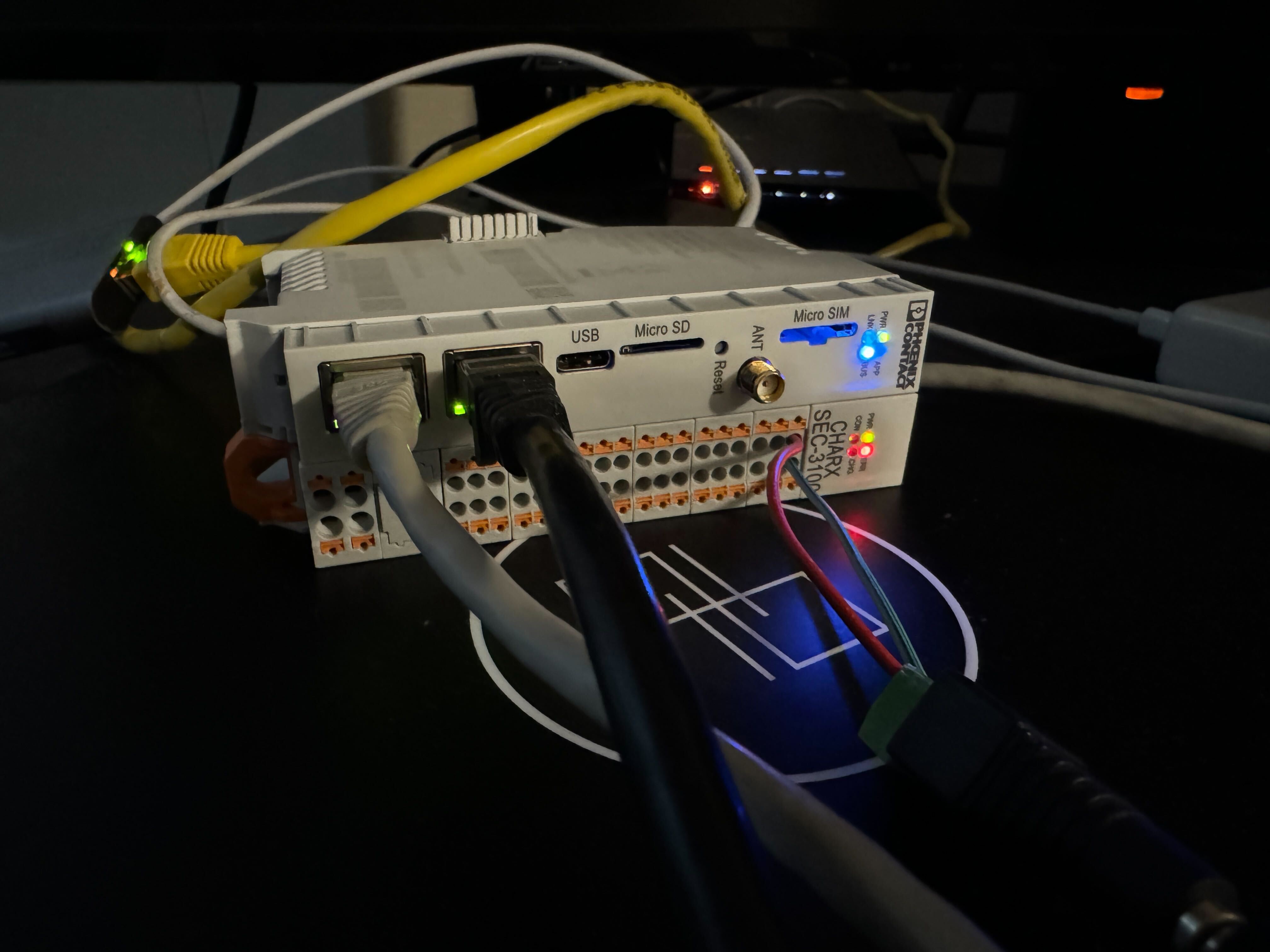

The first Pwn2Own Automotive introduced an interesting category of targets: electric vehicle chargers. This post will detail some of our research on the Phoenix Contact CHARX SEC-3100 and the bugs we discovered, with a second, separate post covering the actual exploit.

We’ve adapted the fundamental bug pattern into a challenge hosted on our in-browser WarGames platform here, if you want a hands-on attempt at exploiting the rather interesting C++ issue we discovered.

Although an EV charger may initially seem like an “exotic” target with non-standard protocols and physical interfaces, once those are figured out, everything eventually boils down to some binary consuming untrusted input (e.g. from the network), and all the classic memory corruption principles apply.

Why the CHARX?

The CHARX was an appealing target for two primary reasons. The first was simply how different it is as a product compared to the other chargers. While the rest of the targets seemed more retail / consumer facing, the CHARX is more “industrial,” a DIN-rail mounted unit seemingly more for infrastructure than actual charging. Its status as an outlier immediately piqued our interest.

Another more practical reason was that the firmware could be easily downloaded from the manufacturer’s website, and was not encrypted. The provided .raucb bundle is intended for use with rauc, but can also be treated as a squashfs filesystem image for mounting or extracting directly.

Recon - Mapping Attack Surface

Once we had decided to actively perform research against the CHARX, we began by enumerating and evaluating potential attack surface.

The CHARX runs a custom embedded version of Linux for 32-bit ARM. SSH is enabled by default, with the unprivileged user user-app having default password user.

In terms of physical ports, the two of interest to us were the two ethernet ports, labeled ETH0 and ETH1. ETH0 is intended to provide a connection to the “outside world,” most likely a larger network and/or the Internet, whereas ETH1 is intended to connect to the ETH0 port of an additional CHARX. In this manner, CHARX units can be daisy-chained such that they all communicate.

Firewall rules within /etc/firewall/rules define which ports (and therefore services) are accessible on these two interfaces. With these rules, some time poking around the system via ssh, and brief reverse engineering, we ended up with the following rough “map” of services, a guide indicating possible attack surface:

Some services can be interfaced with directly through their TCP servers, while several can only be addressed indirectly through MQTT messages. MQTT employs a publish-subscribe model where a client can subscribe to any number of topics, and when any client publishes a message to a topic, the message will be forwarded to all subscribers.

Most of the binaries for these services are located at /usr/sbin/Charx*. Most services are Cython-based: Python code (with some extra syntax for native functionality) is compiled into native binaries / shared objects instead of being interpreted.

Reverse engineering Cython proved tedious, so we chose to focus mostly on the Controller Agent service, a native C++ binary.

Controller Agent Overview

The controller agent is represented towards the upper left of the attack-surface diagram, and is reachable over the eth1 port / interface. This port is intended to connect to an additional CHARX, but in our attack scenario we’ll be connecting a machine directly.

To provide some context, we came across three main functions of the controller agent:

- manage communication between other daisy-chained CHARX units

- manage the AC controller (a separate MCU on the board)

- V2G (vehicle-to-grid) protocol messaging (related to vehicles selling electricity back to the grid)

In terms of actual interaction, the agent can be talked to over UDP, TCP, and the HomePlug Green PHY protocol. We’ll give a brief overview of each communication channel, and discuss specifics later as they become relevant.

TCP JSON Messaging

The TCP server is conceptually the simplest method of communication. The agent listens on port 4444, accepts messages in JSON format, and provides JSON responses.

Each message is a JSON object with the following format:

The deviceUid field specifies the target device in a “device tree” of sorts maintained by the agent. For our purposes, this will mostly be root to indicate the controller agent itself, but there is also a device node representing the AC controller MCU, and there would be other nodes for daisy-chained units if they existed and had performed the proper “handshake.”

Some of the supported operations are:

- deviceInfo: obtain info for the specified device

- childDeviceList: list children in the device tree

- dataAccess: generic hardware data, e.g. reading the temperature of the AC controller (unsupported by the root agent)

- configAccess: read/write configuration variables

- heartbeat

- v2gMessage: proxies / handles V2G messages / responses

If the target device is the agent itself, the message is handled directly. Otherwise it gets forwarded to the proper device (e.g. proxied to a daisy-chained CHARX).

UDP Broadcast Discovery

UDP is primarily used for autodiscovery of daisy-chained units, after which communication would occur over TCP. This is done with UDP broadcast packets on port 4444.

The basic idea is:

- root agent broadcasts a deviceInfo JSON request message

- daisy-chained sub-agent responds

- root agent gets IP from response, uses it to connect to sub-agent over TCP port 4444

There isn’t much complexity here, since it’s simply for initial discovery.

HomePlug

HomePlug is a family of protocols for powerline communications (PLC). That is, transmitting data over electrical wiring. Specifically, the HomePlug Green PHY protocol is the one relevant here.

The protocol is defined in terms of standard ethernet packets. In practice, a dedicated SoC (e.g. some Qualcomm chip) would perform the translation of ethernet packets into raw powerline signals, and vice versa.

It would seem these chips are present on certain CHARX models (although not the 3100 model we had for the contest), intended to be exposed to Linux userspace as interface eth2 (compared to the physical ethernet ports for eth0 and eth1).

The usage of PLC is interesting and provides some background, but is ultimately irrelevant, since the protocol is just ethernet, and we only need to concern ourselves with sending / receiving raw packets. Ethernet / layer-2 packets have a 14-byte header (6-byte destination MAC, 6-byte source MAC, 2-byte EtherType) followed by the data payload.

Notably, the 16-bit EtherType field in the header determines the protocol, which in the case of HomePlug Green PHY would be 0x88e1.
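To make the header layout concrete, here is a small sketch that prepends a 14-byte Ethernet II header to a payload and reads the EtherType back out. The helper names are hypothetical and this is not code from the agent; the only protocol facts it relies on are the field sizes above and that the EtherType is stored big-endian (network byte order).

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

// Ethernet II header: 6-byte destination MAC, 6-byte source MAC,
// 2-byte EtherType in network (big-endian) byte order = 14 bytes total.
constexpr std::size_t kEthHeaderLen = 14;
constexpr uint16_t kEtherTypeHomePlugGP = 0x88e1;

using Mac = std::array<uint8_t, 6>;

// Prepend an Ethernet II header to a payload and return the full frame.
std::vector<uint8_t> build_eth_frame(const Mac& dst, const Mac& src,
                                     uint16_t ethertype,
                                     const std::vector<uint8_t>& payload) {
    std::vector<uint8_t> frame;
    frame.reserve(kEthHeaderLen + payload.size());
    frame.insert(frame.end(), dst.begin(), dst.end());
    frame.insert(frame.end(), src.begin(), src.end());
    frame.push_back(static_cast<uint8_t>(ethertype >> 8));    // high byte first
    frame.push_back(static_cast<uint8_t>(ethertype & 0xff));  // then low byte
    frame.insert(frame.end(), payload.begin(), payload.end());
    return frame;
}

// Read the EtherType back out of a received frame (0 if the frame is too short).
uint16_t frame_ethertype(const std::vector<uint8_t>& frame) {
    if (frame.size() < kEthHeaderLen) return 0;
    return static_cast<uint16_t>((frame[12] << 8) | frame[13]);
}
```

The payload (here, an MME) therefore starts at frame offset 14.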

The controller agent sends and receives these packets by opening a raw socket:

socket(AF_PACKET, SOCK_RAW, htons(0x88e1))

Reading from or writing to the raw socket receives or sends an entire raw packet, including the Ethernet header. Passing 0x88e1 as the protocol means that, when reading from the socket, the kernel will only deliver packets with that EtherType.

The raw socket is bound to an interface, to and from which packets are routed directly. Normally this would be the special eth2 interface for PLC, but the interface can be configured via a configAccess message (over TCP) prior to starting the HomePlug “server.” We can conveniently set this to eth1 (for the physical ETH1 port), to which we’ll already be connected.
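A minimal sketch of opening such a socket and binding it to a chosen interface, using the standard Linux AF_PACKET API. The helper name open_homeplug_socket is hypothetical and this is not the agent's actual code. Binding with a sockaddr_ll restricts traffic to one interface, and actually creating the socket requires CAP_NET_RAW (typically root).

```cpp
#include <arpa/inet.h>       // htons
#include <net/if.h>          // if_nametoindex
#include <netpacket/packet.h>// sockaddr_ll
#include <sys/socket.h>      // socket, bind, AF_PACKET
#include <unistd.h>          // close
#include <cstdint>
#include <cstring>

constexpr uint16_t kEtherTypeHomePlugGP = 0x88e1;

// Open a raw AF_PACKET socket that only receives frames whose EtherType is
// 0x88e1, bound to the named interface (e.g. "eth1").
// Returns the file descriptor, or -1 on any failure.
int open_homeplug_socket(const char* ifname) {
    const unsigned int ifindex = if_nametoindex(ifname);
    if (ifindex == 0) return -1;  // no such interface

    const int fd = socket(AF_PACKET, SOCK_RAW, htons(kEtherTypeHomePlugGP));
    if (fd < 0) return -1;        // e.g. missing CAP_NET_RAW

    sockaddr_ll addr;
    std::memset(&addr, 0, sizeof(addr));
    addr.sll_family = AF_PACKET;
    addr.sll_protocol = htons(kEtherTypeHomePlugGP);
    addr.sll_ifindex = static_cast<int>(ifindex);
    if (bind(fd, reinterpret_cast<sockaddr*>(&addr), sizeof(addr)) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}
```

On the attacking machine, the same call with the name of the interface cabled to ETH1 yields a socket that sees only 0x88e1 frames on that link.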

The HomePlug functionality is closely related to V2G, and the HomePlug “server” is started by sending a v2gMessage request over TCP, with a “subscribe” method type.

Bug #1: HomePlug Parsing Mismatch

The first vulnerability we used ends up causing a simple null dereference, allowing us to crash the service at will. This may seem useless at first, but will prove its usefulness later on.

The HomePlug “server” run by the controller agent reads packets from its raw socket, and handles each one. HomePlug packets are called MMEs (management message entries), and have a 5-byte header followed by the message payload:

- 1-byte Version

- 2-byte MMTYPE for the message type, i.e. an “opcode”

- 2-byte fragmentation info (unused by the agent)

Note that rather than being a full implementation, the agent implements only a subset of the features / MMTYPEs of the Green PHY protocol (for instance, ignoring fragmentation info). You can find an archived version of the full spec here.

For context, message opcodes commonly come in send / respond pairs. From the spec, the naming scheme follows:

Request messages always end in .REQ. The response (if any) to a Request message is always a Confirmation message, which ends in .CNF.

Indication messages always end in .IND. The response (if any) to an Indication message is always a Response message, which ends in .RSP.

The MMTYPE of interest here is CM_AMP_MAP.REQ (0x601c), which is used to send an “amplitude map.” The message payload is of the form:

- 2-byte AMLEN indicating the size of the following array of 4-bit numbers

- n-byte AMDATA, where n = (AMLEN+1)/2
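As an illustration of this layout, the sketch below builds a CM_AMP_MAP.REQ MME (without the Ethernet header). Several details are assumptions, not taken from the post: multi-byte fields are little-endian as in the HomePlug AV specification, the version byte is 0x01, and two 4-bit entries are packed per byte with the low nibble first. Function names are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr uint16_t kMmtypeCmAmpMapReq = 0x601c;
constexpr std::size_t kMmeHeaderLen = 5;  // version(1) + MMTYPE(2) + fragmentation(2)

// Append a 16-bit value in little-endian order (ASSUMPTION: LE MME fields).
void put_le16(std::vector<uint8_t>& out, uint16_t v) {
    out.push_back(static_cast<uint8_t>(v & 0xff));
    out.push_back(static_cast<uint8_t>(v >> 8));
}

// Build a CM_AMP_MAP.REQ MME from a list of 4-bit amplitude entries.
// Nibble packing order (low nibble first) is an ASSUMPTION for illustration.
std::vector<uint8_t> build_amp_map_req(const std::vector<uint8_t>& amp_entries,
                                       uint8_t version = 0x01) {
    std::vector<uint8_t> mme;
    mme.reserve(kMmeHeaderLen + 2 + (amp_entries.size() + 1) / 2);

    mme.push_back(version);
    put_le16(mme, kMmtypeCmAmpMapReq);
    put_le16(mme, 0x0000);  // fragmentation info (ignored by the agent)

    const uint16_t amlen = static_cast<uint16_t>(amp_entries.size());
    put_le16(mme, amlen);   // AMLEN: payload offset 0, i.e. MME offsets 5-6

    // AMDATA: (AMLEN + 1) / 2 bytes, two 4-bit entries per byte.
    for (std::size_t i = 0; i < amp_entries.size(); i += 2) {
        uint8_t byte = static_cast<uint8_t>(amp_entries[i] & 0x0f);
        if (i + 1 < amp_entries.size())
            byte = static_cast<uint8_t>(byte | ((amp_entries[i + 1] & 0x0f) << 4));
        mme.push_back(byte);
    }
    return mme;
}
```

For example, three entries {1, 2, 3} produce AMLEN = 3 at offsets 5-6 followed by two AMDATA bytes, 0x21 and 0x03.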

The agent represents MMEs as subclasses of an MMEFrame class, which for this MMTYPE would be MME_CM_Amp_Map_Req.

To parse the various message payloads, which all have different structures, MMEFrame objects use the concept of what I’ve denoted “blobs,” which are chunks of the message body copied out into separate vectors and tagged with a “type” indicating which field they represent. Parsing populates blobs; MME handling queries / uses the blobs.

The following is pseudocode of the constructor for MME_CM_Amp_Map_Req, which is passed a pointer to the start of the MME (including the 5-byte header):
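A hypothetical C++ approximation of that constructor's behavior, reconstructed only from the description in this section. The blob representation, the function names, and the little-endian read of both lengths are assumptions; the clamp to the received length is added so the sketch itself stays memory-safe.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Blobs modeled as plain byte vectors (hypothetical representation).
struct AmpMapReqBlobs {
    std::vector<uint8_t> amlen;   // the 2 bytes of AMLEN, taken from offsets 5-6
    std::vector<uint8_t> amdata;  // AMDATA bytes, sized from the WRONG offset
};

// Little-endian 16-bit read (ASSUMPTION about byte order).
uint16_t le16_at(const uint8_t* p) {
    return static_cast<uint16_t>(p[0] | (p[1] << 8));
}

// `mme` points at the 5-byte MME header; `len` is the total MME length.
AmpMapReqBlobs parse_amp_map_req_buggy(const uint8_t* mme, std::size_t len) {
    AmpMapReqBlobs blobs;
    if (len < 7) return blobs;  // need header + AMLEN

    // Correct: AMLEN lives at offsets 5-6 and is stored as its own blob.
    blobs.amlen.assign(mme + 5, mme + 7);

    // Bug: the length used to size AMDATA is read from offsets 4-5, i.e. the
    // high byte of the fragmentation field plus the low byte of AMLEN.
    const uint16_t wrong_amlen = le16_at(mme + 4);
    std::size_t amdata_bytes = (wrong_amlen + 1u) / 2u;

    // Clamp to what was received (keeps this sketch in bounds).
    const std::size_t available = len - 7;
    if (amdata_bytes > available) amdata_bytes = available;
    blobs.amdata.assign(mme + 7, mme + 7 + amdata_bytes);
    return blobs;
}
```

With a zeroed fragmentation field and AMLEN = 0x0100, the misread length is 0, so the AMDATA blob comes out empty while the AMLEN blob still says 256 entries.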

Remember that the header is 5 bytes, so the message payload should start at offset 5. Given that AMLEN is the first field in that payload, AMLEN should be bytes 5 and 6.

However, this constructor erroneously uses bytes 4 and 5. This incorrect value determines the length of the AMDATA blob stored for later.

The “correct” AMLEN is also stored as a blob.

What we end up with is the “correct” length in the AMLEN blob, but an AMDATA blob with a completely different size.

To see what this “weird state” can lead to, let’s see what happens after parsing. Rough pseudocode of the handler for this MMTYPE is shown below. It essentially copies AMLEN entries in a loop from the AMDATA blob into a “session-local” vector:
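A hypothetical C++ sketch of that loop, reconstructed from the description (function names are ours). Unlike the real handler, which indexes AMDATA[i] unchecked, it uses .at() so that the length mismatch throws std::out_of_range instead of reading out of bounds.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// The loop bound comes from the AMLEN blob, but the indexed vector is the
// separately sized AMDATA blob.
std::vector<uint8_t> copy_amp_map(uint16_t amlen,
                                  const std::vector<uint8_t>& amdata) {
    std::vector<uint8_t> session_map;  // stand-in for the "session-local" vector
    session_map.reserve(amlen);
    for (std::size_t i = 0; i < amlen; ++i) {
        session_map.push_back(amdata.at(i));  // real code: unchecked AMDATA[i]
    }
    return session_map;
}

// True if the loop would index past the end of AMDATA.
bool handler_would_overrun(uint16_t amlen, const std::vector<uint8_t>& amdata) {
    try {
        copy_amp_map(amlen, amdata);
        return false;
    } catch (const std::out_of_range&) {
        return true;
    }
}
```

An AMLEN of 256 paired with an empty AMDATA blob overruns on the very first iteration.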

The number of loop iterations uses the “correct” AMLEN, however the AMDATA blob being iterated over is not actually that size!

If it’s smaller, AMDATA[i] may be out of bounds.

Now, you may be thinking…

Hold up, I was expecting a meager null deref… this looks more like an out-of-bounds read!

This is technically an out-of-bounds read, and initially seemed promising for an information leak. However, while there did seem to exist code for echoing the “session-local” vector back over the wire, we unfortunately could not find any xrefs or code paths able to actually trigger it. Instead, as a consolation prize, we can utilize the fact that a std::vector of size 0 will have a null pointer for its backing store, and attempting to read from this vector during the loop causes a null dereference.
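The null pointer comes from how an empty vector is represented. Here is a small check, assuming a mainstream standard library (libstdc++ or libc++). The C++ standard itself does not require data() to be null for an empty vector.

```cpp
#include <cstdint>
#include <vector>

// Build an empty byte vector from a zero-length range, as an AMDATA blob
// would be when its computed length is 0, and report whether its backing
// store pointer is null (true on libstdc++ / libc++, not guaranteed).
bool empty_blob_has_null_storage() {
    const uint8_t bytes[1] = {0};
    std::vector<uint8_t> blob(bytes, bytes);  // zero-length range: no allocation
    return blob.data() == nullptr;
}
```

With a null data(), the first loop iteration reads AMDATA[0], i.e. address 0, which is unmapped and raises SIGSEGV.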

However, the SIGSEGV from the null dereference isn’t necessarily the end of the line for the process, which brings us to our next bug…

Bug #2: Use-After-Free on Process Teardown

The second vulnerability we leveraged was a UAF that occurred during cleanup before process exit, which we discovered mostly by accident. Sometimes in vulnerability research, you spend weeks staring at code to no avail (which we initially did, finding the HomePlug bug). Other times, you simply attach gdb, continue after a few seconds, and magically get a segfault…

This was happening because some sort of system monitor was detecting that the service had hung (due to being paused in gdb). The monitor then sent SIGTERM to the process, with the intent of shutting it down cleanly and restarting the service afterwards. However, some bug was being triggered “organically” during the exit handlers.

Exit Handlers

In the CharxControllerAgent binary, a considerable number of exit handlers are registered via __aeabi_atexit, calls to which seem to be emitted implicitly by the C++ compiler to destroy objects declared as static.

Since static objects are constructed once but stay alive until the program exits, the C++ runtime registers exit handlers to ensure their destruction.

The most relevant static global is a ControllerAgent object, a massive root object for encapsulating nearly all of the agent’s state. This is initially constructed in main, where the destructor is also registered as an exit handler.

On a related note, the agent installs several signal handlers as well. For SIGTERM and SIGABRT, the handler sets a global boolean indicating the main run-loop should stop, cleanly returning from main. For SIGSEGV, the handler manually invokes exit(1). Consequently, delivery of any of these signals ends up triggering exit handlers.
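A sketch of signal handling with the behavior described above, using POSIX sigaction. The handler and flag names are hypothetical, and we don't know which API the agent actually uses to install its handlers.

```cpp
#include <csignal>
#include <cstdlib>
#include <cstring>
#include <signal.h>

// Set by the SIGTERM/SIGABRT handler; a main run-loop would poll this and
// return from main, after which the registered exit handlers run.
volatile std::sig_atomic_t g_stop_requested = 0;

void on_stop_signal(int) { g_stop_requested = 1; }

// SIGSEGV handler that calls exit(1), which also runs the exit handlers
// (including compiler-registered destructors of static objects).
[[noreturn]] void on_segv(int) { std::exit(1); }

// Install handlers in the style described above. Returns false on error.
bool install_agent_style_handlers() {
    struct sigaction sa;
    std::memset(&sa, 0, sizeof(sa));
    sigemptyset(&sa.sa_mask);

    sa.sa_handler = on_stop_signal;
    if (sigaction(SIGTERM, &sa, nullptr) != 0) return false;
    if (sigaction(SIGABRT, &sa, nullptr) != 0) return false;

    sa.sa_handler = on_segv;
    if (sigaction(SIGSEGV, &sa, nullptr) != 0) return false;
    return true;
}
```

Note that exit() is not async-signal-safe. Calling it from a SIGSEGV handler runs every exit handler on a process that just crashed, and this is what routes a crash into the teardown code.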

In other words, our previously useless null dereference can be used to invoke the SIGSEGV signal handler, which calls exit and will end up triggering the exit-handling bug! Let’s take a look at what the actual issue turned out to be…

Destructors Considered Harmful

Before going into the CHARX-specific details, we’ll demonstrate the same bug pattern using a simple toy example, which will be easier to reason about.

See if you can spot the bug in the following code…
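A minimal reconstruction of the pattern, based only on the description in this section (all names are hypothetical). To keep the sketch free of undefined behavior, the std::vector member is replaced by a Values stand-in that records its lifetime in a global flag, and the callback checks that flag instead of touching destroyed memory. Outer is destroyed at the end of a scope rather than as a static at exit, but the implicit member destruction order is the same either way.

```cpp
#include <string>
#include <vector>

// Kept outside Outer so they can be inspected after Outer is gone.
std::vector<std::string> g_events;
bool g_values_alive = false;

// Stand-in for the std::vector member: it only tracks its own lifetime.
struct Values {
    Values() { g_values_alive = true; }
    ~Values() {
        g_values_alive = false;
        g_events.push_back("~values");
    }
};

struct Outer;

// Inner holds a back-pointer and calls into its owner while being destroyed.
struct Inner {
    Outer* owner;
    explicit Inner(Outer* o) : owner(o) {}
    ~Inner();
};

struct Outer {
    Inner inner{this};
    Values values;

    // In the real pattern this would iterate over `values`; here it only
    // records whether `values` was still alive when the callback ran.
    void on_inner_destroyed() {
        g_events.push_back(g_values_alive ? "callback: values alive"
                                          : "callback: values already destroyed");
    }
};

Inner::~Inner() {
    g_events.push_back("~inner");
    owner->on_inner_destroyed();
}

// Destroy an Outer and return the order in which things happened.
std::vector<std::string> destroy_outer() {
    g_events.clear();
    { Outer outer; }
    return g_events;
}
```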

Consider what occurs when returning from main. This will end up invoking the destructor for Outer, which would have been registered as an exit handler after construction.

But, what happens during this destructor? It’s not explicitly defined, so it will be whatever default destructor the C++ compiler creates.

According to the C++ Reference:

… the compiler calls the destructors for all non-static non-variant data members of the class, in reverse order of declaration …

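In other words, for Outer, the vector is destructed before the inner class. This leads to the following chain of events when destructing Outer:

- the implicit ~Outer starts destroying members in reverse order of declaration

- the vector is destructed first, freeing its backing store

- the inner class's destructor runs next and calls back into Outer

- Outer's callback accesses the already-destructed vector… UAF!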

This is a very subtle bug, mostly caused by the implicit nature of C++, combined with the pattern of an inner class calling back into the outer class during destruction.

An interesting consequence of this implicitness is that simply switching the two lines declaring the members inner and values “patches” the bug, since the destructors would then be called in the opposite order.
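The effect of swapping the declarations can be checked with a small instrumented sketch (hypothetical names). The vector is represented by a Values stand-in that records its lifetime in a global flag. Because values is declared before inner here, it outlives inner, so the callback still sees it alive.

```cpp
#include <string>
#include <vector>

std::vector<std::string> g_events;
bool g_values_alive = false;

// Stand-in for the std::vector member: it only tracks its own lifetime.
struct Values {
    Values() { g_values_alive = true; }
    ~Values() {
        g_values_alive = false;
        g_events.push_back("~values");
    }
};

struct Outer;

struct Inner {
    Outer* owner;
    explicit Inner(Outer* o) : owner(o) {}
    ~Inner();
};

struct Outer {
    Values values;      // declared first, so destroyed last
    Inner inner{this};  // declared second, so destroyed first

    void on_inner_destroyed() {
        g_events.push_back(g_values_alive ? "callback: values alive"
                                          : "callback: values already destroyed");
    }
};

Inner::~Inner() {
    g_events.push_back("~inner");
    owner->on_inner_destroyed();
}

std::vector<std::string> destroy_outer() {
    g_events.clear();
    { Outer outer; }
    return g_events;
}
```

The fix is entirely implicit: nothing in the destructor changes, only the order of two declarations. This is why an explicit destructor that tears down inner first is a more robust fix than relying on declaration order.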

The ControllerAgent destructor

The actual bug follows this same pattern. Almost all of the controller agent’s global structures / state are rooted in a ControllerAgent class instance. In turn, this object’s destructor performs most of the program’s cleanup. As mentioned, this destructor is registered as an exit handler.

One of the ControllerAgent fields is a std::list<ClientSession>, a list of “sessions” each representing a connected client.

This is the std::vector analog of our toy example.

Another field is a “manager” ClientConnectionManagerTcp, which internally holds a list of ClientConnectionTcp objects representing TCP clients.

This is the inner analog of our toy example.

These two lists are conceptually one-to-one, where each lower-level ClientConnectionTcp has a corresponding higher-level ClientSession. An integral “connection ID” associates each object with the other.

When the lower-level TCP connection is closed, the “manager” (ClientConnectionManagerTcp) cleans up both objects. It owns the lower-level object and can perform the cleanup itself, but to clean up the higher-level object, it calls back into a ControllerAgent function to notify it that the matching ClientSession should be invalidated. This involves iterating through the std::list<ClientSession> looking for the matching ID.
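The following is a heavily simplified, hypothetical model of these structures. The names loosely follow the classes above, and the real classes are far larger. In this model the session list is declared before the manager, so it outlives the manager's destructor and the callback stays safe. Declaring it after the manager gives the vulnerable ordering.

```cpp
#include <cstddef>
#include <list>

struct ClientSession { int conn_id; };        // higher-level object
struct ClientConnectionTcp { int conn_id; };  // lower-level object

class Agent;

class ConnectionManager {
public:
    explicit ConnectionManager(Agent& agent) : agent_(agent) {}
    ~ConnectionManager();                 // closes any remaining connections
    void add(int conn_id) { conns_.push_back({conn_id}); }
    void close(int conn_id);              // lower-level cleanup + callback
    std::size_t size() const { return conns_.size(); }
private:
    Agent& agent_;
    std::list<ClientConnectionTcp> conns_;
};

class Agent {
public:
    Agent() = default;
    Agent(const Agent&) = delete;
    Agent& operator=(const Agent&) = delete;

    // Declared BEFORE `manager`, so it is destroyed AFTER it.
    std::list<ClientSession> sessions;
    ConnectionManager manager{*this};

    void accept(int conn_id) {
        sessions.push_back({conn_id});
        manager.add(conn_id);
    }
    // Callback from the manager: search the list for the matching ID.
    void invalidate_session(int conn_id) {
        sessions.remove_if([conn_id](const ClientSession& s) {
            return s.conn_id == conn_id;
        });
    }
};

void ConnectionManager::close(int conn_id) {
    conns_.remove_if([conn_id](const ClientConnectionTcp& c) {
        return c.conn_id == conn_id;
    });
    agent_.invalidate_session(conn_id);
}

ConnectionManager::~ConnectionManager() {
    while (!conns_.empty()) close(conns_.front().conn_id);
}
```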

However, this breaks during destruction, since the std::list<ClientSession> gets destructed before ClientConnectionManagerTcp:

- ~ControllerAgent kicks off cleanup

  - ~std::list<ClientSession> frees all linked list nodes (this is most likely the default, standard-library-defined destructor)

  - ~ClientConnectionManagerTcp starts cleaning up lower-level TCP connections

    - calls back into ControllerAgent to invalidate a connection ID

      - ControllerAgent attempts to search its std::list<ClientSession> for the matching ID

        - in this half-destructed state, this std::list is already gone… UAF!

Next Up: Exploitation

At this point, we have a UAF primitive, with the caveat that it can be triggered just once on process exit (which we can initiate at will with the null dereference).

We found the very subtle destructor ordering issue quite interesting, as an example of how the implicit nature of C++ can lead to unexpected and easy-to-miss vulnerabilities. Bugs that only occur on process exit are similarly easy to overlook.

The exploitation process is covered in a follow-up post.

Also, if you want to take a crack at exploiting the same core bug pattern (but with ASLR disabled!), check out this challenge on our in-browser WarGames platform.

For reference, the ZDI advisories / CVE assignments are listed here:

- HomePlug null dereference: CVE-2024-26003, ZDI-24-860

- Destructor UAF: CVE-2024-26005, ZDI-24-861