Cracking the Walls of the Safari Sandbox

Fuzzing the macOS WindowServer for Exploitable Vulnerabilities

When exploiting real world software or devices, achieving arbitrary code execution on a system may only be the first step towards total compromise. For high value or security conscious targets, remote code execution is often succeeded by a sandbox escape (or a privilege escalation) and persistence. Each of these stages usually requires its own entirely unique exploit, making some weaponized zero-days a ‘chain’ of exploits.

Considered high risk consumer software, modern web browsers use software sandboxes to contain damage in the event of remote compromise. Having exploited Apple Safari in the previous post, we turn our focus towards escaping the Safari sandbox on macOS in an effort to achieve total system compromise.

In this fifth blogpost of our Pwn2Own series, we discuss our experience evaluating the Safari sandbox on macOS for security vulnerabilities. We select a software component exposed to the sandbox, and use Frida to build an in-process fuzzer as a means of discovering exploitable vulnerabilities.

Software Sandboxes

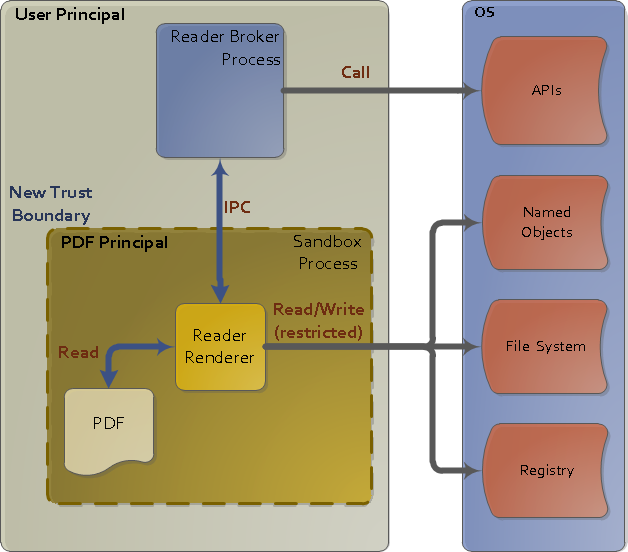

Software sandboxing is often accomplished by restricting the runtime privileges of an application through platform-dependent security features provided by the operating system. When layered appropriately, these security controls can limit the application’s ability to communicate with the broader system (syscall filtering, service ACLs), prevent it from reading/writing files on disk, and block external resources (networking).

Tailored to a specific application, a sandbox will aggressively reduce the system’s exposure to a potentially malicious process, preventing the process from making persistent changes to the machine. As an example, a compromised but sandboxed application cannot ransomware user files on disk if the process was not permitted filesystem access.

A diagram of the old Adobe Reader Protected Mode Sandbox, circa 2010

Over the past several years we have seen sandboxes grow notably more secure in isolating problematic software. This has brought about discussion regarding the value of a theoretically perfect software sandbox: when properly contained does it really matter if an attacker can gain arbitrary code execution on a machine?

The answer to this question has been hotly debated amongst security researchers. This discussion has been further aggravated by the contrasting approach to browser security taken by Microsoft Edge versus Google Chrome. Where one leads with in-process exploit mitigations (Edge), the other is a poster child for isolation technology (Chrome).

Mitigation bypasses can become class breaks. Layers of isolation reliably increase attack cost roughly linearly. https://t.co/nvp8yKjTjW

— Dino A. Dai Zovi (@dinodaizovi) February 28, 2017

As a simple barometer, the Pwn2Own results over the past several years seem to indicate that sandboxing is winning when put toe-to-toe against advanced in-process mitigations. There are countless opinions on why this may be the case, and whether this trend holds true for the real world.

To state it plainly, as attackers we do think that sandboxes (when done right) add considerable value towards securing software. More importantly, this is an opinion shared by many familiar with attacking these products.

However, as technology improves and gives way to mitigations such as strict control flow integrity (CFI), these views may change. The recent revelations wrought by Meltdown & Spectre are a great example of this, putting cracks into even the theoretically perfect sandbox.

At the end of the day, both sandboxing and mitigation technologies will continue to improve and evolve. They are not mutually exclusive of each other, and play an important role towards raising the costs of exploitation in different ways.

Isolation vs mitigation is a false choice. You want both. Edge and Chrome are good exemplars heading down this path in different ways.

— Mudge (@dotMudge) February 28, 2017

macOS Sandbox Profiles

On macOS, there is a powerful low-level sandboxing technology called ‘Seatbelt’ which Apple has deprecated (publicly) in favor of the higher level ‘App Sandbox’. With little-to-no official documentation available, information on how to use the former system sandbox has been learned through reverse engineering efforts by the community (1,2,3,4,5, …).

To be brief, the walls of Seatbelt-based macOS sandboxes are built using rules that are defined in a human-readable sandbox profile. A few of these sandbox profiles live on disk, and can be seen tailored to the specific needs of their specific application.

For the Safari browser, its sandbox profile is composed of the following two files (locations may vary):

/System/Library/Sandbox/Profiles/system.sb

/System/Library/StagedFrameworks/Safari/WebKit.framework/Versions/A/Resources/com.apple.WebProcess.sb

The macOS sandbox profiles are written in a Scheme dialect (often referred to as SBPL, the Sandbox Profile Language) that is evaluated by an embedded TinyScheme interpreter. Profiles are often written as a whitelist of actions or services required by the application, disallowing access to much of the broader system by default.

...

(version 1)

(deny default (with partial-symbolication))

(allow system-audit file-read-metadata)

(import "system.sb")

;;; process-info* defaults to allow; deny it and then allow operations we actually need.

(deny process-info*)

(allow process-info-pidinfo)

...

For example, the sandbox profile can whitelist explicit directories or files that the sandboxed application should be permitted to access. Here is a snippet from the WebProcess.sb profile, allowing Safari read-only access to certain directories that store user preferences on disk:

...

;; Read-only preferences and data

(allow file-read*

;; Basic system paths

(subpath "/Library/Dictionaries")

(subpath "/Library/Fonts")

(subpath "/Library/Frameworks")

(subpath "/Library/Managed Preferences")

(subpath "/Library/Speech/Synthesizers")

...

Serving almost like horse blinders, sandbox profiles help focus our attention (as attackers) by listing exactly what non-sandboxed resources we can interface with on the system. This helps enumerate relevant attack surface that can be probed for security defects.

Escaping Sandboxes

In practice, sandbox escapes are often their own standalone exploit. This means that an exploit to escape the browser sandbox is almost always entirely unique from the exploit used to achieve initial remote code execution.

When escaping software sandboxes, it is common to attack code that executes outside of a sandboxed process. By exploiting the kernel or an application (such as a system service) running outside the sandbox, a skilled attacker can pivot into an execution context where there is no sandbox.

The Safari sandbox policy explicitly whitelists a number of external software attack surfaces. As an example, the policy snippet below highlights a number of IOKit interfaces which can be accessed from the sandbox. This is because they expose system controls that are required by certain features in the browser.

...

;; IOKit user clients

(allow iokit-open

(iokit-user-client-class "AppleMultitouchDeviceUserClient")

(iokit-user-client-class "AppleUpstreamUserClient")

(iokit-user-client-class "IOHIDParamUserClient")

(iokit-user-client-class "RootDomainUserClient")

(iokit-user-client-class "IOAudioControlUserClient")

...

Throughout the profile, entries that begin with iokit-* refer to functionality we can invoke via the IOKit framework. These are the userland clients (interfaces) that one can use to communicate with their relevant kernel counterparts (kexts).

Another interesting class of rules defined in the sandbox profile falls under allow mach-lookup:

...

;; Remote Web Inspector

(allow mach-lookup

(global-name "com.apple.webinspector"))

;; Various services required by AppKit and other frameworks

(allow mach-lookup

(global-name "com.apple.FileCoordination")

(global-name "com.apple.FontObjectsServer")

(global-name "com.apple.PowerManagement.control")

(global-name "com.apple.SystemConfiguration.configd")

(global-name "com.apple.SystemConfiguration.PPPController")

(global-name "com.apple.audio.SystemSoundServer-OSX")

(global-name "com.apple.analyticsd")

(global-name "com.apple.audio.audiohald")

...

The allow mach-lookup keyword depicted above is used to permit the sandboxed application access to various remote procedure call (RPC)-like servers hosted within system services. These policy definitions allow our application to communicate with these whitelisted RPC servers over the mach IPC.

Additionally, there are some explicitly whitelisted XPC services:

...

(deny mach-lookup (xpc-service-name-prefix ""))

(allow mach-lookup

(xpc-service-name "com.apple.accessibility.mediaaccessibilityd")

(xpc-service-name "com.apple.audio.SandboxHelper")

(xpc-service-name "com.apple.coremedia.videodecoder")

(xpc-service-name "com.apple.coremedia.videoencoder")

...

XPC is a higher-level IPC mechanism used to facilitate communication between processes, again built on top of the mach IPC. XPC is fairly well documented, with a wealth of resources and security research available for it online (1,2,3,4, …).
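To make the enumeration step concrete, here is a minimal sketch that pulls the IOKit, mach and XPC names out of a profile's text. The regex-based `enumerateSurface` helper is an assumption for illustration only: it is not a real profile parser and it ignores imports, conditionals and deny rules.

```javascript
// Sketch: list the externally reachable services named in a sandbox profile.
// This is plain pattern matching, not a real parser for the profile language.
function enumerateSurface(profileText) {
  const filters = ['global-name', 'xpc-service-name', 'iokit-user-client-class'];
  const surface = {};
  for (const filter of filters) {
    // Matches entries such as: (global-name "com.apple.webinspector")
    const pattern = new RegExp('\\(' + filter + '\\s+"([^"]+)"\\)', 'g');
    surface[filter] = [];
    let match;
    while ((match = pattern.exec(profileText)) !== null) {
      surface[filter].push(match[1]);
    }
  }
  return surface;
}

// Sample input assembled from the profile snippets quoted in this post.
const sampleProfile = `
(allow iokit-open
    (iokit-user-client-class "IOHIDParamUserClient"))
(allow mach-lookup
    (global-name "com.apple.webinspector"))
(deny mach-lookup (xpc-service-name-prefix ""))
(allow mach-lookup
    (xpc-service-name "com.apple.audio.SandboxHelper"))
`;

console.log(enumerateSurface(sampleProfile));
```

Running something like this over the full profile gives a rough checklist of candidate targets, which still has to be reviewed by hand because rule ordering and deny rules are not modelled.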

There are a few other interesting avenues of attacking non-sandboxed code, including making syscalls directly to the XNU kernel, or through IOCTLs. We did not look at these surfaces due to time constraints.

Our evaluation of the sandbox was brief, so our knowledge and insight only extends so far. A more interesting exercise for the future would be to enumerate attack surface that currently cannot be restrained by sandbox policies.

Target Selection

Having surveyed some of the components exposed to the Safari sandbox, the next step was to decide what we felt would be easiest to target as a means of escape.

Attacking components that live in the macOS Kernel is attractive: successful exploitation guarantees not only a sandbox escape, but also unrestricted ring-zero code execution. With the introduction of ‘rootless’ in macOS 10.11 (El Capitan), a kernel mode privilege escalation is necessary to do things such as loading unsigned drivers without disabling SIP.

The downside of attacking kernel code is the cost in debuggability and convenience. Tooling to debug or instrument kernel code is primitive, poorly documented, or largely non-existent. Reproducing bugs, analyzing crashes, or stabilizing an exploit often requires a full system reboot, which can be taxing on time and morale.

After weighing these traits and reviewing public research on past Safari sandbox escapes, we zeroed in on the WindowServer, a complex usermode system service that is accessible to the Safari sandbox over the mach IPC:

(allow mach-lookup

...

(global-name "com.apple.windowserver.active")

...

)

For our purposes, WindowServer appeared to be nearly an ideal target:

- Nearly every process can communicate with it (Safari included)

- It lives in userland, simplifying debugging and introspection

- It runs with permissions essentially equivalent to root

- It has a relatively large attack surface

- It has a notable history of security vulnerabilities

WindowServer is a closed-source, private framework (a library) which implies developers are not meant to interface with it directly. This also means that official documentation is non-existent, and what little information is available publicly is thin, dated, or simply incomplete.

WindowServer Attack Surface

WindowServer works by processing incoming mach_messages from applications running on the system. On macOS, mach_messages are a form of IPC to enable communication between running processes. The Mach IPC is generally used by system services to expose an RPC interface for other applications to call into.

Under the hood, virtually every GUI macOS application transparently communicates with the WindowServer. As hinted by its name, the WindowServer system service is responsible for actually drawing application windows to the screen. A running application will tell the WindowServer (via RPC) what size or shape to make the window, and where to put it:

For those familiar with Microsoft Windows, the macOS WindowServer is a bit like a usermode Win32k, albeit less complex. It is also responsible for drawing the mouse cursor, managing hotkeys, and facilitating some cross-process communication (among many other things).

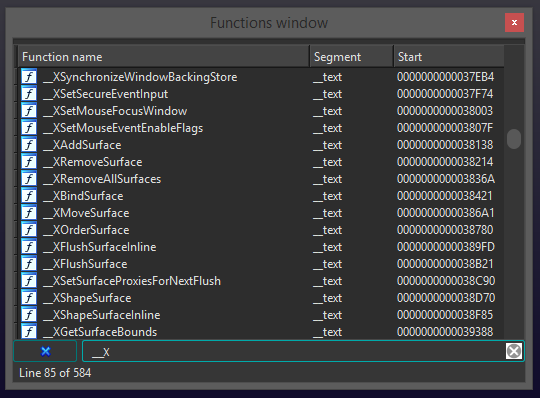

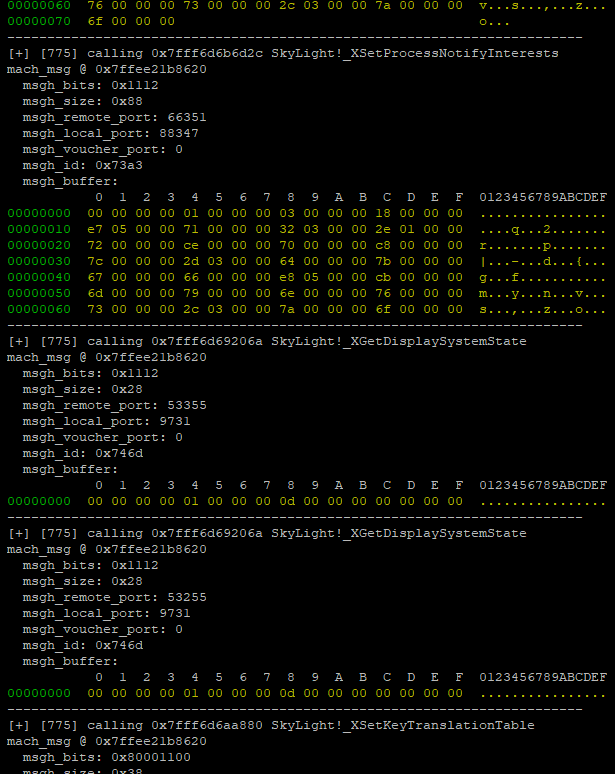

Applications can interface with the WindowServer over the mach IPC to reach some 600 RPC-like functions. When the privileged WindowServer system service receives a mach_message, it will be routed to its respective message handler (a ‘remote procedure’) coupled with foreign data to be parsed by the handler function.

As an attacker, these functions prefixed with _X... (such as _XBindSurface) represent directly accessible attack surface. From the Safari sandbox, we can send arbitrary mach messages (data) to the WindowServer targeting any of these functions. If we can find a vulnerability in one of these functions, we may be able to exploit the service.

We found that these some 600 handler functions are split among three MIG-generated mach subsystems within the WindowServer. Each subsystem has its own message dispatch routine which initially parses the header of the incoming mach messages and then passes the message-specific data on to its appropriate handler via indirect call:

RAX is a code pointer to a message handler function that is selected based on the incoming message id

The three dispatch subsystems make for an ideal place to fuzz the WindowServer in-process using dynamic binary instrumentation (DBI). They represent a generic ‘last hop’ for incoming data before it is delivered to any of the ~600 individual message handlers.
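To illustrate why the dispatch routine is such a convenient chokepoint, here is a simplified model of MIG-style dispatch. It assumes the general shape of MIG-generated servers (a starting message id plus a table of handlers indexed by `msgh_id - start`). The ids and the `_XExampleA` / `_XExampleB` handler names are made up, and this is not a reconstruction of the WindowServer code.

```javascript
// Simplified model of a MIG-style subsystem: a base message id and a table
// of handlers. All ids and handler names here are hypothetical.
function makeSubsystem(startId, handlers) {
  return { start: startId, handlers: handlers };
}

// Model of the dispatch routine: validate the id, pick the handler, call it.
function dispatch(subsystem, inputMsg, outputMsg) {
  const index = inputMsg.msgh_id - subsystem.start;
  if (index < 0 || index >= subsystem.handlers.length) return false;
  const handler = subsystem.handlers[index]; // analogous to the 'call rax' target
  if (!handler) return false;                // unused slot in the table
  handler(inputMsg, outputMsg);              // single call site shared by all handlers
  return true;
}

const demo = makeSubsystem(0x7000, [
  function _XExampleA(msg, reply) { reply.handledBy = '_XExampleA'; },
  null,
  function _XExampleB(msg, reply) { reply.handledBy = '_XExampleB'; },
]);

const reply = {};
dispatch(demo, { msgh_id: 0x7002 }, reply);
console.log(reply.handledBy); // _XExampleB
```

Because every handler is reached through the one indirect call in `dispatch`, a single hook at that call site observes all messages for the subsystem regardless of which handler they target.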

Without having to reverse engineer any of these surface level functions or their unique message formats (input), we had discovered a low-cost avenue to begin automated vulnerability discovery. By instrumenting these chokepoints, we could fuzz all incoming WindowServer traffic that can be generated through normal user interaction with the system.

In-process Fuzzing With Frida

Frida is a DBI framework that injects a JavaScript interpreter into a target process, enabling blackbox instrumentation via user provided scripts. This may sound like a bizarre use of JavaScript, but this model allows for rapid prototyping of near limitless binary introspection against compiled applications.

We started our Frida fuzzing script by defining a small table for the instructions we wished to hook at runtime. Each of these instructions was an indirect call (e.g., call rax) within the dispatch routines covered in the previous section.

// instructions to hook (offset from base, reg w/ call target)

var targets = [

['0x1B5CA2', 'rax'], // WindowServer_subsystem

['0x2C58B', 'rcx'], // Rendezvous_subsystem

['0x1B8103', 'rax'] // Services_subsystem

]

The JavaScript API provided by Frida is packed with functionality that allows one to snoop on or modify the process runtime. Using the Interceptor API, it is possible to hook individual instructions as a place to stop and introspect the process. The basis for our hooking code is provided below:

function InstallProbe(probe_address, target_register) {

var probe = Interceptor.attach(probe_address, function(args) {

var input_msg = args[0]; // rdi (the incoming mach_msg)

var output_msg = args[1]; // rsi (the response mach_msg)

// extract the call target & its symbol name (_X...)

var call_target = this.context[target_register];

var call_target_name = DebugSymbol.fromAddress(call_target);

// ready to read / modify / replay

console.log('[+] Message received for ' + call_target_name);

// ...

});

return probe;

}

To hook the instructions we defined earlier, we first resolved the base address of the private SkyLight framework that they reside in. We are then able to compute the virtual addresses of the target instructions at runtime using the module base + offset. After that it is as simple as installing the interceptors on these addresses:

// locate the runtime address of the SkyLight framework

var skylight = Module.findBaseAddress('SkyLight');

console.log('[*] SkyLight @ ' + skylight);

// hook the target instructions

for (var i in targets) {

var hook_address = ptr(skylight).add(targets[i][0]); // base + offset

InstallProbe(hook_address, targets[i][1])

console.log('[+] Hooked dispatch @ ' + hook_address);

}
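Once a probe fires, the first thing worth decoding is the mach message header. The sketch below parses a header from a plain byte array so it can run under Node. The field offsets follow the public `mach_msg_header_t` layout, and `parseMachHeader` is a hypothetical helper, not part of the fuzzer shown above.

```javascript
// Decode a mach_msg_header_t from raw bytes (little-endian, as on x86-64).
// Field offsets per the public <mach/message.h> layout:
//   0: msgh_bits         4: msgh_size          8: msgh_remote_port
//  12: msgh_local_port  16: msgh_voucher_port  20: msgh_id
function parseMachHeader(bytes) {
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  if (view.byteLength < 24) {
    throw new RangeError('need at least 24 header bytes');
  }
  const bits = view.getUint32(0, true);
  return {
    msgh_bits: bits,
    msgh_size: view.getUint32(4, true),
    msgh_remote_port: view.getUint32(8, true),
    msgh_local_port: view.getUint32(12, true),
    msgh_voucher_port: view.getUint32(16, true),
    msgh_id: view.getInt32(20, true),
    // The top bit of msgh_bits marks a complex message (one with descriptors).
    complex: (bits & 0x80000000) !== 0,
  };
}

// Build a sample header for demonstration; the values are illustrative.
const sample = new Uint8Array(24);
const writer = new DataView(sample.buffer);
writer.setUint32(0, 0x1100, true);  // msgh_bits
writer.setUint32(4, 36, true);      // msgh_size
writer.setInt32(20, 0x7235, true);  // msgh_id

const header = parseMachHeader(sample);
console.log('msgh_id = 0x' + header.msgh_id.toString(16) + ', complex = ' + header.complex);
```

Inside a Frida script the same offsets would be read from the `input_msg` pointer using Frida's pointer read methods rather than a `DataView`. The exact calls depend on the Frida version in use.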

During the installed message intercept, we now had the ability to record, modify, or replay mach message contents just before they are passed into their underlying message handler (an _X... function). This effectively allowed us to man-in-the-middle any mach traffic to these MIG subsystems and dump their contents at runtime:

From this point, our fuzzing strategy was simple. We used our hooks to flip random bits (dumb fuzzing) on any incoming messages received by the WindowServer. Simultaneously, we recorded the bitflips injected by our fuzzer to create ‘replay’ log files.

Replaying the recorded bitflips in a fresh instance of WindowServer gave us some degree of reproducibility for any crashes produced by our fuzzer. The ability to consistently reproduce a crash is priceless when trying to identify the underlying bug. A sample snippet of a bitflip replay log looked like the following:

...

{"msgh_bits":"0x1100","msgh_id":"0x7235","buffer":"000000001100000001f65342","flip_offset":[4],"flip_mask":[16]}

{"msgh_bits":"0x1100","msgh_id":"0x723b","buffer":"00000000010000000900000038a1b63e00000000"}

{"msgh_bits":"0x80001112","msgh_id":"0x732f","buffer":"0000008002000000ffffff7f","ool_bits":"0x1000101","desc_count":1}

{"msgh_bits":"0x1100","msgh_id":"0x723b","buffer":"00000000010000000900000070f3a53e00000000","flip_offset":[12],"flip_mask":[2]}

{"msgh_bits":"0x80001100","msgh_id":"0x722a","buffer":"0000008002000000dfffff7f","ool_bits":"0x1000101","desc_count":1,"flip_offset":[8],"flip_mask":[32]}

...
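The flip-and-record step can be sketched as follows. This is a minimal illustration under stated assumptions, not the fuzzer's actual code: the helper names are hypothetical, the record simply mirrors the `flip_offset` / `flip_mask` fields seen in the log, and the random source is injectable so a run can be made deterministic.

```javascript
// Flip `count` random bits in `bytes` (a Uint8Array) in place and return a
// record shaped like the replay log entries: byte offsets plus bit masks.
function flipRandomBits(bytes, count, rng = Math.random) {
  const record = { flip_offset: [], flip_mask: [] };
  for (let i = 0; i < count; i++) {
    const offset = Math.floor(rng() * bytes.length); // which byte
    const mask = 1 << Math.floor(rng() * 8);         // which bit in that byte
    bytes[offset] ^= mask;
    record.flip_offset.push(offset);
    record.flip_mask.push(mask);
  }
  return record;
}

// Re-apply a recorded set of flips to a message buffer.
function replayFlips(bytes, record) {
  record.flip_offset.forEach(function (offset, i) {
    bytes[offset] ^= record.flip_mask[i];
  });
  return bytes;
}

// Convert a hex string such as the log's "buffer" field into bytes.
function hexToBytes(hex) {
  return Uint8Array.from(hex.match(/../g) || [], (pair) => parseInt(pair, 16));
}

// Demo: fuzz a copy of a message, then reproduce the same mutation from the record.
const original = hexToBytes('0000008002000000ffffff7f');
const fuzzed = Uint8Array.from(original);
const record = flipRandomBits(fuzzed, 1);
const replayed = replayFlips(Uint8Array.from(original), record);

console.log(JSON.stringify(record));
console.log(replayed.every((byte, i) => byte === fuzzed[i])); // true
```

Since XOR is its own inverse, applying the same record to an already-flipped buffer restores the original bytes, which is handy when comparing a crashing message against its unmodified form.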

In order for the fuzzer to be effective, the final step required us to stimulate the system to generate WindowServer message ‘traffic’. This could have been accomplished any number of ways, such as letting a user navigate around the system, or writing scripts to randomly open applications and move them around.

But through careful study of pop culture and past vulnerabilities, we decided to simply place a weight on the ‘Enter’ key:

On the macOS lockscreen, holding ‘Enter’ happens to generate a reasonable variety of message traffic to the WindowServer. When a crash occurred as a result of our bitflipping, we saved the replay log and crash state to disk.

Conveniently, when WindowServer crashes, macOS locks the machine and restarts the service… bringing us back to the lockscreen. A simple Python script running in the background sees the new WindowServer instance pop up and injects Frida to start the next round of fuzzing.
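A minimal sketch of that respawn logic, written in JavaScript for consistency with the fuzzer script rather than in Python. The `getPid` and `inject` callbacks are hypothetical stand-ins for "look up the current WindowServer PID" and "attach Frida with the fuzzing script". They are injected so the core logic stays testable without touching the system.

```javascript
// Build a poll function that re-injects the fuzzer whenever the target's PID
// changes, i.e. whenever the service has crashed and been restarted.
// getPid(): returns the current PID, or null if the process is not running.
// inject(pid): attaches the instrumentation to that PID.
function makeWatcher(getPid, inject) {
  let lastPid = null;
  return function poll() {
    const pid = getPid();
    if (pid !== null && pid !== lastPid) {
      lastPid = pid;
      inject(pid);
      return true;  // a new instance was found and instrumented
    }
    return false;   // same instance as before, or not running yet
  };
}

// Demo with a scripted sequence of PIDs standing in for the real system.
const observedPids = [null, 100, 100, 200];
const injected = [];
const poll = makeWatcher(
  function () { return observedPids.shift(); },
  function (pid) { injected.push(pid); }
);
for (let i = 0; i < 4; i++) poll();
console.log(injected); // [ 100, 200 ]
```

In a real setup `poll` would be called on a timer, with `getPid` and `inject` wired to whatever process lookup and Frida invocation are used on the machine.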

This was the lowest-effort and lowest-cost fuzzer we could have made for this target, yet it still proved fruitful.

Discovery & Root Cause Analysis

Left to run overnight, the fuzzer produced a number of unique (mostly useless) crashes. Among the handful of more interesting crashes was one that looked particularly promising but would require additional investigation.

We replayed the bitflip log for that crash against a new instance of WindowServer with lldb (the default macOS debugger) attached and were able to reproduce the issue. The crashing instruction and register state depicted what looked like an Out-of-Bounds Read:

Process 77180 stopped

* thread #1, queue = 'com.apple.main-thread', stop reason = EXC_BAD_ACCESS (address=0x7fd68940f7d8)

frame #0: 0x00007fff55c6f677 SkyLight`_CGXRegisterForKey + 214

SkyLight`_CGXRegisterForKey:

-> 0x7fff55c6f677 <+214>: mov rax, qword ptr [rcx + 8*r13 + 0x8]

0x7fff55c6f67c <+219>: test rax, rax

0x7fff55c6f67f <+222>: je 0x7fff55c6f6e9 ; <+328>

0x7fff55c6f681 <+224>: xor ecx, ecx

Target 0: (WindowServer) stopped.

In the crashing context, r13 appeared to be totally invalid (very large).

Another attractive component of this crash was its proximity to a top-level _X... function. The shallow nature of this crash implied that we would likely have direct control over the malformed field that caused the crash.

(lldb) bt

* thread #1, queue = 'com.apple.main-thread', stop reason = EXC_BAD_ACCESS (address=0x7fd68940f7d8)

* frame #0: 0x00007fff55c6f677 SkyLight`_CGXRegisterForKey + 214

frame #1: 0x00007fff55c28fae SkyLight`_XRegisterForKey + 40

frame #2: 0x00007ffee2577232

frame #3: 0x00007fff55df7a57 SkyLight`CGXHandleMessage + 107

frame #4: 0x00007fff55da43bf SkyLight`connectionHandler + 212

frame #5: 0x00007fff55e37f21 SkyLight`post_port_data + 235

frame #6: 0x00007fff55e37bfd SkyLight`run_one_server_pass + 949

frame #7: 0x00007fff55e377d3 SkyLight`CGXRunOneServicesPass + 460

frame #8: 0x00007fff55e382b9 SkyLight`SLXServer + 832

frame #9: 0x0000000109682dde WindowServer`_mh_execute_header + 3550

frame #10: 0x00007fff5bc38115 libdyld.dylib`start + 1

frame #11: 0x00007fff5bc38115 libdyld.dylib`start + 1

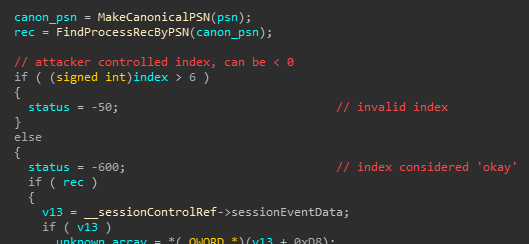

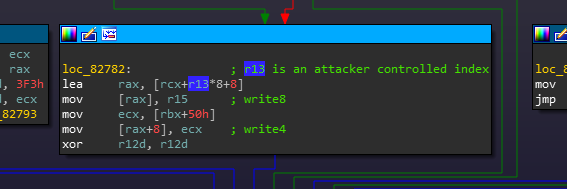

Root cause analysis to identify the bug responsible for this crash took only minutes. Directly prior to the crash was a signed/unsigned comparison issue within _CGXRegisterForKey(...):

WindowServer tries to ensure that the user-controlled index parameter is six or less. However, this check is implemented as a signed-integer comparison. This means that supplying a negative number of any size (eg, -100000) will incorrectly get us past the check.

Our ‘fuzzed’ index was a 32-bit field in the mach message for _XRegisterForKey(...). The bit our fuzzer flipped happened to be the uppermost bit, changing the number to a massive negative value:

HEX | BINARY | DECIMAL

----------+----------------------------------+-------------

BEFORE: 0x00000005 | 00000000000000000000000000000101 | 5

AFTER: 0x80000005 | 10000000000000000000000000000101 | -2147483643

^

|- Corrupted bit
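The effect of that single bitflip on the bounds check can be reproduced with a few lines of arithmetic. The sketch below models the check described above (index must be six or less) in both signed and unsigned form. It assumes that r13 in the crashing instruction holds the sign-extended index, and the function names are illustrative only.

```javascript
// Model of the flawed check: the index is compared as a signed 32-bit
// integer, so any negative value satisfies "index <= 6".
function signedCheckPasses(rawIndex) {
  return (rawIndex | 0) <= 6;   // | 0 reinterprets the value as int32
}

// The same comparison on the unsigned interpretation rejects the value.
function unsignedCheckPasses(rawIndex) {
  return (rawIndex >>> 0) <= 6; // >>> 0 reinterprets the value as uint32
}

// Byte offset applied by the crashing load: [rcx + 8*r13 + 0x8]
function loadOffset(signedIndex) {
  return 8 * signedIndex + 8;
}

const before = 0x00000005;
const after = (before ^ 0x80000000) | 0;  // flip bit 31

console.log(after);                       // -2147483643
console.log(signedCheckPasses(after));    // true
console.log(unsignedCheckPasses(after));  // false
console.log(loadOffset(after));           // -17179869136
```

An offset roughly 16 GiB below the base pointer is far outside the intended table, which is consistent with the bad access seen in the crash.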

Assuming we can get the currently crashing read to succeed through careful indexing to valid memory, there are a few minor constraints between us and what looks like an exploitable write later in the function:

Under the right conditions, this bug appears to be an Out-of-Bounds Write! Any vulnerability that allows for memory corruption (a write) is generally categorized as an exploitable condition (until proven otherwise). This vulnerability has since been fixed as CVE-2018-4193.

In the next post, we provide a standalone PoC to trigger this crash and detail the constraints that make this bug rather difficult to exploit while developing our full Safari sandbox escape exploit against the WindowServer.

Conclusion

Escaping a software sandbox is a necessary step towards total system compromise when exploiting modern browsers. We used this post to discuss the value of sandboxing technology, the standard methodology to escape one, and our approach towards evaluating the Safari sandbox for a means of escaping it.

By reviewing existing resources, we devised a strategy to tackle the Safari sandbox and fuzz a historically problematic component (WindowServer) with a very simple in-process fuzzer. Our process demonstrates nothing novel, but it does show that even contrived fuzzers are still able to find critical, real-world bugs.