Dangers of the Decompiler

A Sampling of Anti-Decompilation Techniques

Traditional (assembly-level) reverse engineering of software is a tedious process that has been made far more accessible by modern-day decompilers. Operating only on compiled machine code, a decompiler attempts to recover an approximate source-level representation.

'... and I resisted the temptation, for years. But, I knew that, if I just pressed that button ...' --Dr. Mann (Interstellar, 2014)

There’s no denying it: the science and convenience behind a decompiler-backed disassembler is awesome. At the press of a button, a complete novice can translate obscure ‘machine code’ into human-readable source and engage in the reverse engineering process.

The reality is that researchers are growing dependent on these technologies too, leaving us quite exposed to their imperfections. In this post we’ll explore a few anti-decompilation techniques to disrupt or purposefully mislead decompiler-dependent reverse engineers.

Positive SP Value

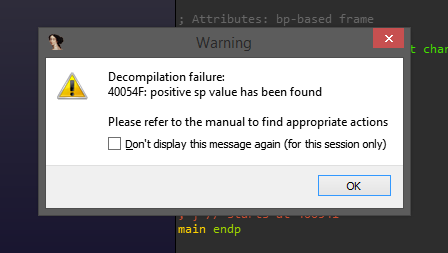

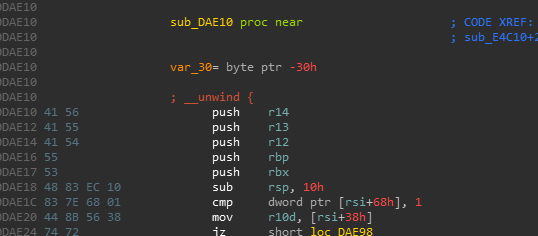

The first technique is a classic, but ‘noisy’, method of disrupting the Hex-Rays decompiler. In IDA Pro, the decompiler will refuse to decompile a function if it does not clean up its stack allocations (balancing the stack pointer) prior to returning.

This happens occasionally (and innocently) when IDA cannot reasonably infer the type definition of certain function calls.

As an anti-decompilation technique, a developer can elicit this behavior in a function they would like to ‘hide’ by using an opaque predicate that disrupts the balance of the stack pointer.

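```c
//
// compiled on Ubuntu 16.04 with:
// gcc -o predicate predicate.c -masm=intel
//

#include <stdio.h>

// xor eax, eax always sets ZF, so `jz opaque` is always taken and
// `add rsp, 4` never executes at runtime. Static analysis that does not
// evaluate the predicate still sees an unbalanced stack on the fall-through
// path. The fixed label means the macro can be used only once per file.
#define positive_sp_predicate \
    __asm__ (" push rax     \n"\
             " xor eax, eax \n"\
             " jz opaque    \n"\
             " add rsp, 4   \n"\
             "opaque:       \n"\
             " pop rax      \n");

void protected()
{
    positive_sp_predicate;
    puts("Can't decompile this function");
}

int main(void)
{
    protected();
    return 0;
}
```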

The instruction add rsp, 4 in the positive_sp_predicate macro defined above can never execute at runtime, because xor eax, eax always sets the zero flag and the jz is therefore always taken. It will, however, trip up the static analysis that IDA performs for decompilation: attempting to decompile the protected() function generated by the provided source fails with a ‘positive sp value’ error instead of producing pseudocode.

This technique is relatively well known. It can be undone by patching out the predicate or by correcting the stack pointer offset by hand.

In the past I’ve used this technique as a simple stopgap to thwart novice reverse engineers (e.g., students) from skipping the disassembly and going straight to decompiler output.

Return Hijacking

An aspiration of modern decompilers is to accurately identify and abstract away low-level bookkeeping logic that compilers generate, such as function prologues/epilogues or control flow metadata.

Compiler-generated function prologues typically save registers, allocate space for the stack frame, and so on.

Decompilers strive to omit this kind of information from their output because concepts such as saving registers or managing stack frame allocation do not exist at the source level.

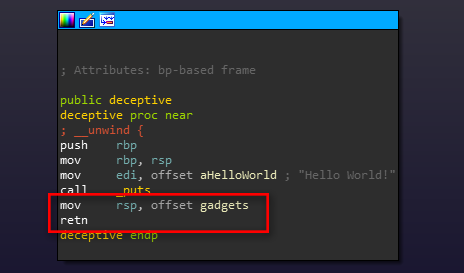

An interesting artifact of these omissions (or perhaps a gap in the Hex-Rays decompiler heuristics) is that we can ‘pivot’ the stack just prior to returning from a function without the decompiler throwing a warning or presenting any indication of foul play.

Stack pivoting is a technique commonly used in binary exploitation to point the stack at attacker-controlled data, typically so that an arbitrary ROP chain can run. In this case, we (as developers) use it as a mechanism to hijack execution right out from underneath an unsuspecting reverse engineer. Those focused solely on decompiler output are guaranteed to miss it.

We pivot the stack onto a tiny ROP chain that has been compiled into the binary for this exercise of misdirection. The end result is a function call that is ‘invisible’ to the decompiler. Our discreetly called function simply prints out ‘Evil Code’ to prove that it was executed.

The code used to demonstrate this technique of hiding code from the decompiler can be found below.

//

// compiled on Ubuntu 16.04 with:

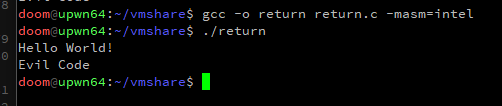

// gcc -o return return.c -masm=intel

//

#include <stdio.h>

void evil() {

puts("Evil Code");

}

extern void gadget();

__asm__ (".global gadget \n"

"gadget: \n"

" pop rax \n"

" mov rsp, rbp \n"

" call rax \n"

" pop rbp \n"

" ret \n");

void * gadgets[] = {gadget, evil};

void deceptive() {

puts("Hello World!");

__asm__("mov rsp, %0;\n"

"ret"

:

:"i" (gadgets));

}

void main() {

deceptive();

}

Abusing ‘noreturn’ Functions

The last technique we’ll cover exploits IDA’s perception of functions that are automatically labeled as noreturn. Everyday examples of noreturn functions include exit() and abort() from the C standard library.

While generating the pseudocode for a given function, the decompiler will discard any code after a call to a noreturn function. The expectation is that in no universe should a function like exit() ever return and continue executing code.

If one can trick IDA into believing a function is noreturn when it actually isn’t, a malicious actor can quietly hide code behind any calls made to it. The following example demonstrates one of many ways we can achieve this result.

```c
//
// compiled on Ubuntu 16.04 with:
// gcc -o noreturn noreturn.c
//

#include <stdio.h>
#include <stdlib.h>

void ignore() {
    exit(0); // force a PLT/GOT entry for exit()
}

void deceptive() {
    puts("Hello World!");
    srand(0); // post-processing will swap srand() <--> exit()
    puts("Evil Code");
}

int main(void) {
    deceptive();
    return 0;
}
```

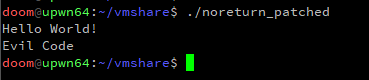

By compiling the code above and running a short Binary Ninja-based post-processing script against the resulting binary, we can swap the ordinal numbers pushed by the stubs in the Procedure Linkage Table. These indices are used when resolving library imports at runtime.

In this example we swap the ordinals for srand() with exit() and doctor some calls for compile-time convenience. As a result, IDA believes the deceptive() function in the modified binary is calling exit(), a noreturn function, instead of srand().

The exit() call we see in IDA is in fact srand() (effectively a no-op) at runtime. The effect on the decompiler is almost identical to the return hijacking technique covered in the previous section. Running the binary demonstrates that our ‘Evil Code’ is getting executed, unbeknownst to the decompiler.

While the presence of malicious code is blatant in these examples, hiding these techniques within larger functions and complex conditionals makes them exceptionally easy to overlook.

Conclusion

Decompilers are an impressive but imperfect technology. They operate on incomplete information and do their best to approximate for us humans. Malicious actors can (and will) leverage these asymmetries as a means of deception.

As the industry grows more reliant on the luxuries of today’s decompilers, the adoption of anti-decompilation techniques will increase and evolve in the same vein as anti-debugging and anti-reversing have.